aidkit's answer to the EU AI Act: Compliance at the speed of innovation

The European Union's AI Act (AIA) is now a legal fact. Regardless of your opinion on the legislation, it offers something invaluable to firms and innovators: legal certainty. Some inventiveness may thrive under the invisible hand, but when safety, and thus liability, are central to an innovation, the steadying hand of oversight is essential.

To help customers meet their new obligations with confidence, neurocat is expanding its aidkit software into a full AI governance solution. Rather than a top-down management tool, "aidkit Comply" links your cross-functional units together horizontally. This lets you collect documentation and evidence on your AI systems organically as your teams complete their tasks.

The whole solution is integrated directly into your MLOps and data platforms and thus dynamically updates with every new pipeline, keeping your compliance moving at the speed of your innovation.

What the AI Act means for you

The AI Act’s provisions will come into force in phases over the next two years. Like the GDPR before it, this European legislation will have extra-territorial implications; it will impact any AI developer or provider whose operations touch on the European single market even if they are based elsewhere.

The obligations placed upon firms by the AIA vary based on the risk of a given AI system, which is classified as minimal/none, limited, high, or unacceptable (and thus prohibited). High-risk AI systems, which include any AI system affecting eight specified areas (falling largely under fundamental rights) and any use case covered by EU harmonization legislation for public safety, will be subject to the most comprehensive compliance requirements.

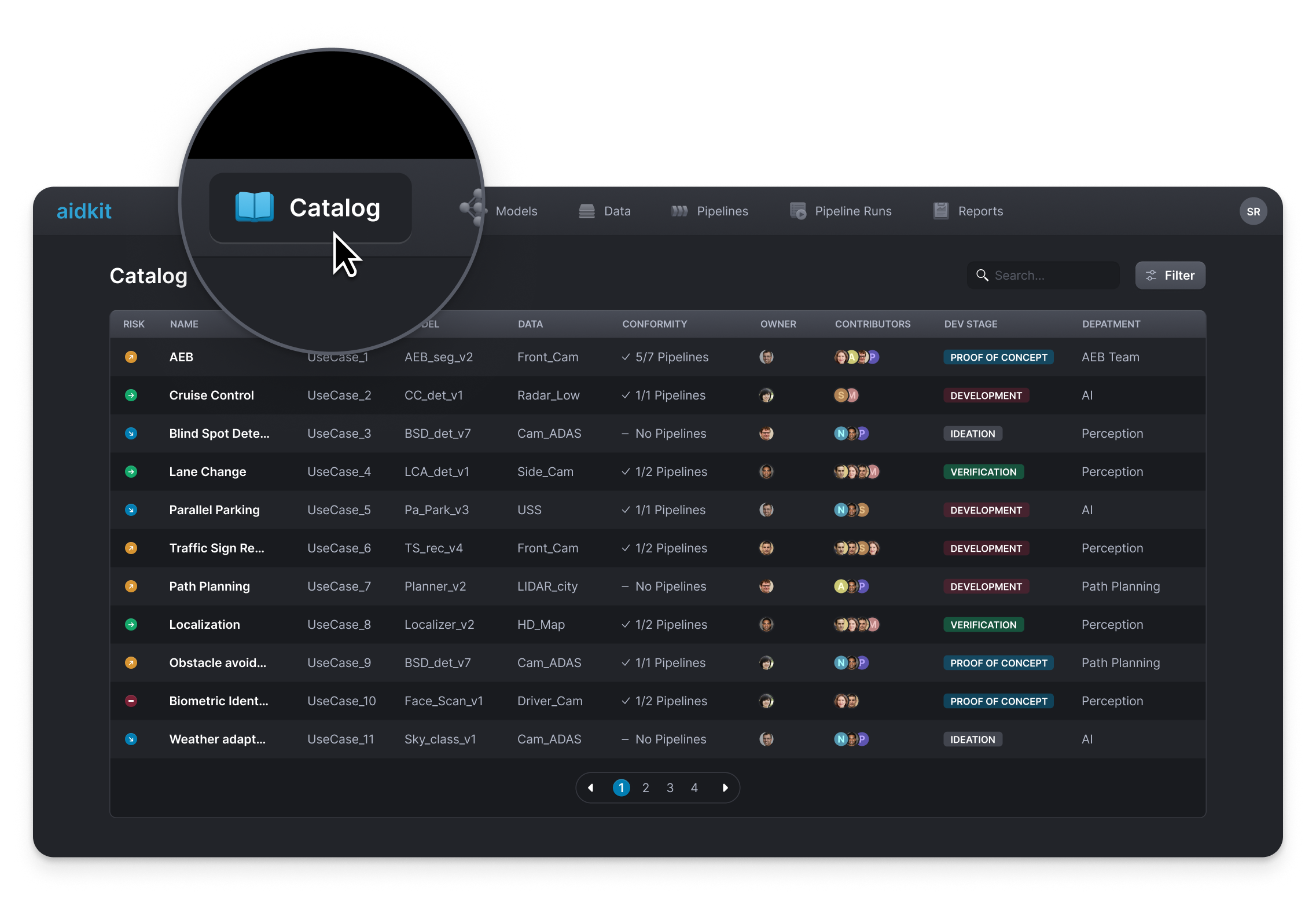

To comply with the AIA, you first need to know what you have and to gather all the information about it in one place. We call this an "AI Catalog": a record of all your AI systems, whether in development or deployed. Maintained in an organized fashion, this catalog will help you assign a risk class to each use case, compile testing and technical documentation, and subsequently meet the obligations for each system based on its risk class.
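To make the idea of an AI Catalog concrete, here is a minimal sketch of such a record in Python. It is not aidkit's data model: the names `RiskClass`, `UseCase`, and `AICatalog`, their fields, and the example use cases are all assumptions made for illustration, and the risk class assigned in the example is not a legal assessment.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, List, Optional


class RiskClass(Enum):
    """The four AIA risk classes named in the text."""
    MINIMAL = "minimal/none"
    LIMITED = "limited"
    HIGH = "high"
    UNACCEPTABLE = "unacceptable"


@dataclass
class UseCase:
    """One AI system, in development or deployed (hypothetical fields)."""
    name: str
    deployed: bool
    risk_class: Optional[RiskClass] = None  # None until someone has assessed it
    models: List[str] = field(default_factory=list)
    datasets: List[str] = field(default_factory=list)
    test_reports: List[str] = field(default_factory=list)


class AICatalog:
    """A single place that holds every use case, keyed by name."""

    def __init__(self) -> None:
        self._use_cases: Dict[str, UseCase] = {}

    def register(self, use_case: UseCase) -> None:
        # Re-registering a name overwrites the earlier entry.
        self._use_cases[use_case.name] = use_case

    def unclassified(self) -> List[str]:
        """Names of use cases that still need a risk class."""
        return sorted(n for n, uc in self._use_cases.items() if uc.risk_class is None)

    def by_risk(self, risk_class: RiskClass) -> List[str]:
        """Names of use cases assigned to the given risk class."""
        return sorted(n for n, uc in self._use_cases.items() if uc.risk_class is risk_class)


# Illustrative entries only; the names and the HIGH label are made up.
catalog = AICatalog()
catalog.register(
    UseCase(
        "pedestrian-detection",
        deployed=True,
        risk_class=RiskClass.HIGH,
        models=["detector-v3"],
        datasets=["street-scenes"],
    )
)
catalog.register(UseCase("log-anomaly-triage", deployed=False))

print(catalog.by_risk(RiskClass.HIGH))
print(catalog.unclassified())
```

Keeping the risk class optional makes the gap visible: anything returned by `unclassified()` is a system whose obligations are not yet known.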


The aidkit solution: Requirement management right in your workflow

The aidkit AI catalog

Track your AI use cases right alongside your development work

The AI Act's requirements apply to every individual AI system a firm has deployed or that is in development. Obtaining a full overview of these, with different versions and sub-components spread across teams and constantly changing, can be time-consuming.

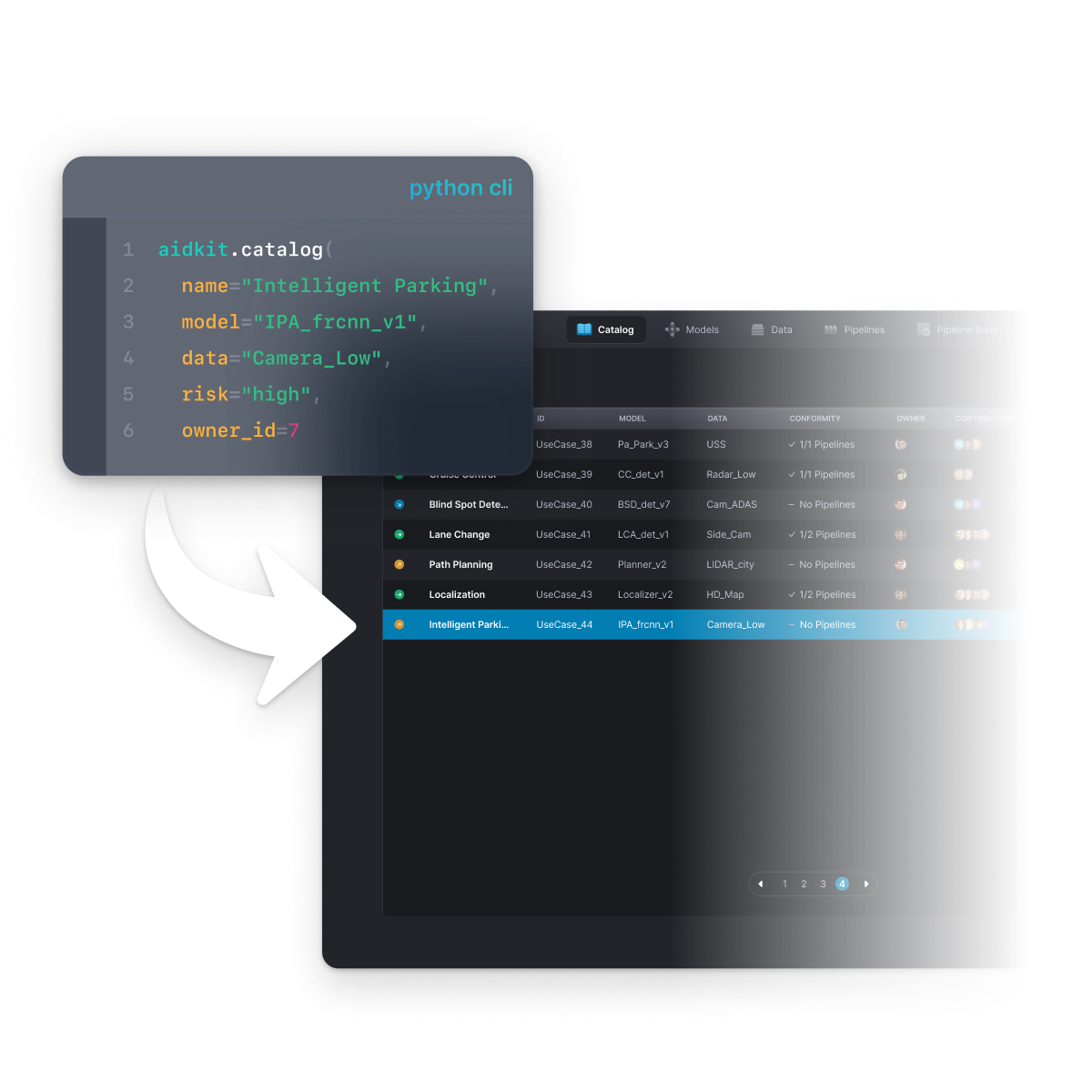

With aidkit's new Catalog feature, accessible right in aidkit’s dashboard, you can get an overview of all your AI use cases in one place. Moreover, because aidkit is first and foremost a development tool, building and tracking your AI catalog occurs naturally in the course of your work.

The AI Catalog is linked directly to all the other tasks that you can already do in aidkit. From each use case you can access the models and data you imported, as well as the results of the tests you conducted. aidkit ensures the integrity and availability of all the technical sources and outputs you need to document your AI development in your compliance process.

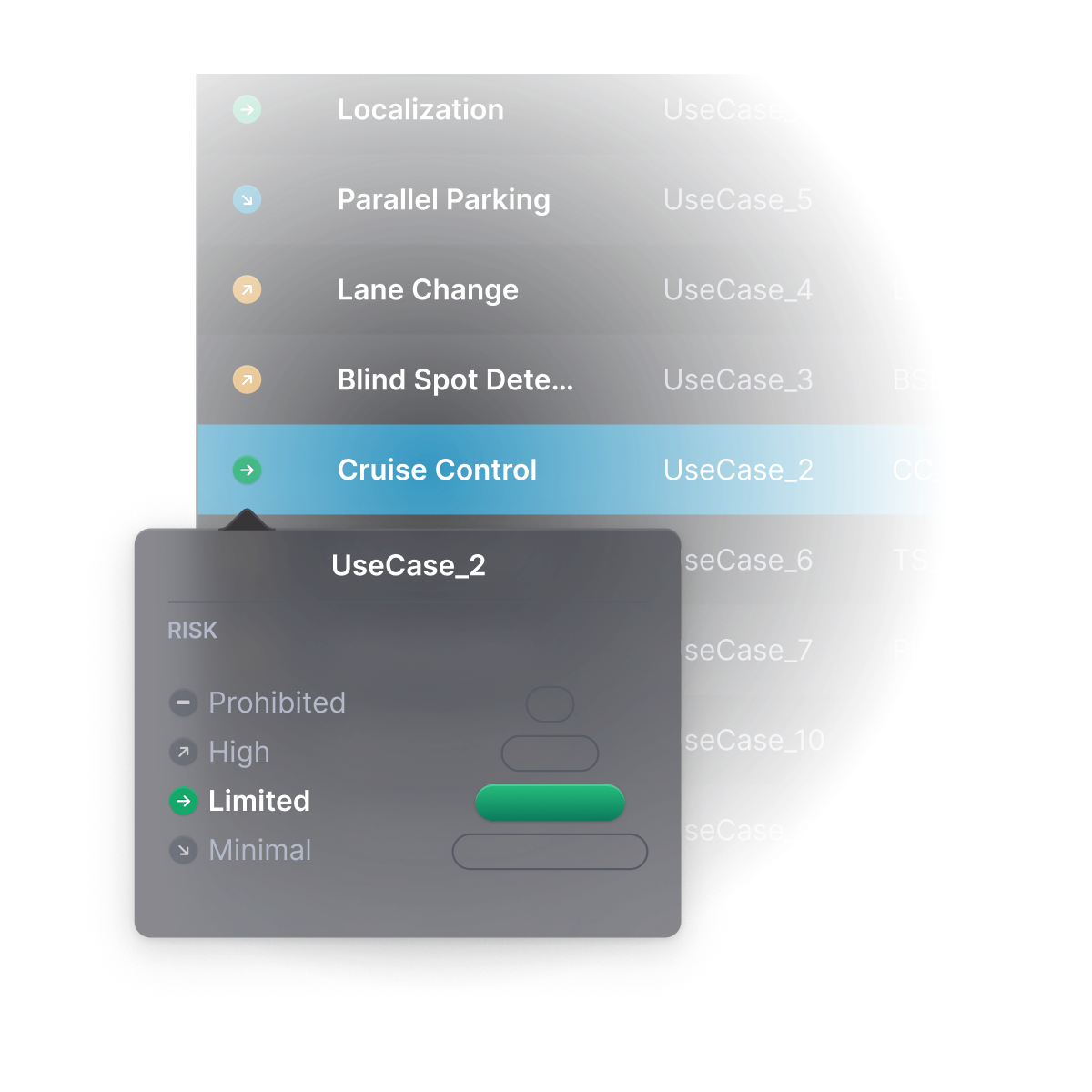

Risk management and consulting

Link your use cases directly to risk classes

The AI Act assigns risk classes based on an AI system's application. By positioning your AI use cases in a well-designed catalog, you can clearly identify which qualify as a “safety component” and are thus high-risk, as well as which applications fall into other classes.

For low-risk use cases, the aidkit Catalog itself, with all the linked sources (models, datasets) and meta information, can be used directly to fulfill your transparency requirements. Thus, you can already meet half your obligations just by being organized and not separating your development work from your compliance management.

Yet the law has a qualitative nature as well, not reducible to scores and flow charts. The AIA annexes covering high-risk use cases can seem overly broad. neurocat’s dedicated standardization and risk experts are ready to assist you in assigning risk classes to all your applications.

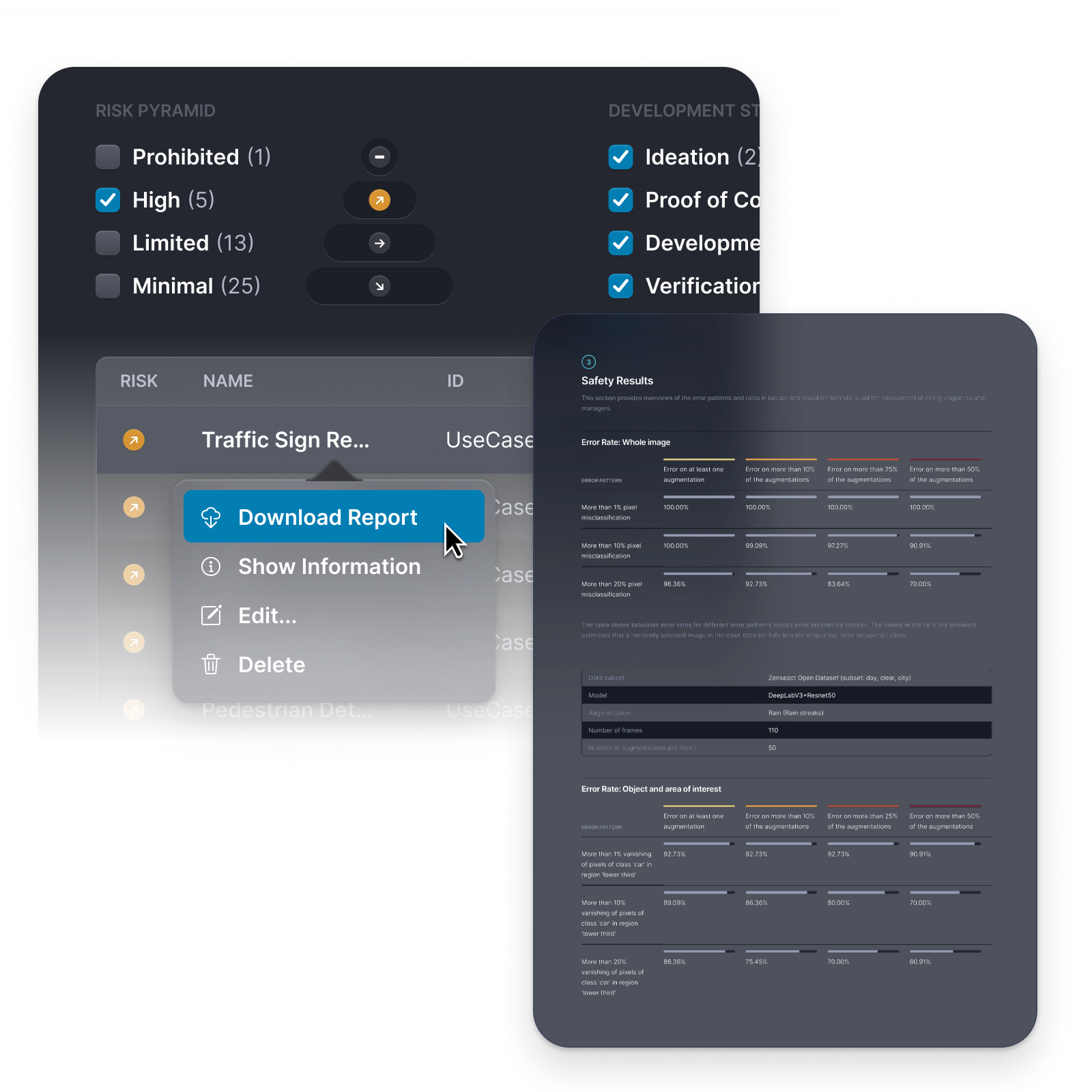

Conformity assessment

Compile your technical documents to prepare your conformity assessment

If an AI use case is classified as high-risk, the AIA mandates that a conformity assessment be completed before the system goes to market and be kept up to date thereafter. The conformity assessments will reflect standards currently being drafted by the appropriate industry-relevant EU and related bodies.

These standards will, to the extent possible, reflect existing standards such as those of the ISO. neurocat has long worked with the ISO and other standardization bodies, writing, for example, the first AI quality standard (DIN SPEC 92001-1, -2, and -3). We are also contributing to the development of the upcoming harmonized standards.

We are now embedding this knowledge into aidkit’s existing robustness testing capabilities. This lets users run testing pipelines directly for each use case, generating results in our new report format that can support conformity assessments.

MLOps integration and API

Integration ensures your compliance completes itself as you work

Most existing AI governance solutions introduce an additional hurdle by being management-oriented tools. They do not easily connect to existing MLOps and data platforms, so there is a risk that the AI catalog within the governance tool falls out of date.

aidkit, on the other hand, can be directly accessed and maintained via our Python client, which allows automatic tracking of machine learning models, data sets, and testing pipelines. Our Python client will soon be extended with the "aidkit.catalog()" method, allowing users to link models, data sets, and additional meta information to easily create an AI use case.

aidkit: Legal compliance at the speed of innovation

Together toward ethical, trustworthy AI

By tapping into the existing capabilities of aidkit for model and data handling, as well as robustness testing, "aidkit Comply" provides a use case management system that will ensure you don't overlook any AIA requirement. But this is only the first step in how we envision supporting our clients with their AIA compliance.

Next, we will work with our experts and clients to update our reports and other outputs in order to make submission of your records to regulatory and oversight bodies as seamless as possible, whether it be simple transparency or a full conformity assessment.

Throughout all phases of our technology roadmap, we want to make sure that we address the true pain points of your AIA compliance needs. Therefore, we encourage you to get in touch with us and participate in an open dialogue regarding feature requirements.

Help aidkit become not just any governance tool, but the tool that gives your company a competitive edge in compliance, so you can get your AI to market faster and more safely.

Driving safe perception

Continue your journey with us

Don’t just manage risks. Reduce your perception system’s chances of encountering unknown situations and enhance its resilience.