The security and safety of AI is something we all inherently recognize as important. Yet many of us don’t have a background in this topic and have limited time to learn new things or keep up with the latest developments.

neurocat is here to help. We recently contributed* to a new report by the German Federal Office for Information Security (BSI), “Security of AI-Systems: Fundamentals – Adversarial Deep Learning”. It is a wealth of knowledge: 288 pages of guidelines and research, old and new.

Until you can set aside time during your holiday to peruse this magnum opus, neurocat wants to give you a TL;DR bird’s-eye overview. Today we want to talk about “Chapter 1 – Best Practices” in an accessible way so that the whole team can be involved in securing your AI.

Thinking about AI security

Devising the best practices for securing your AI system involves first answering three questions about:

1. The nature of what you are seeking to protect (your AI).

2. The threats to that object (e.g. adversaries).

3. The vulnerabilities of your object that make a threat a real possibility.

Let’s look at each of these in turn.

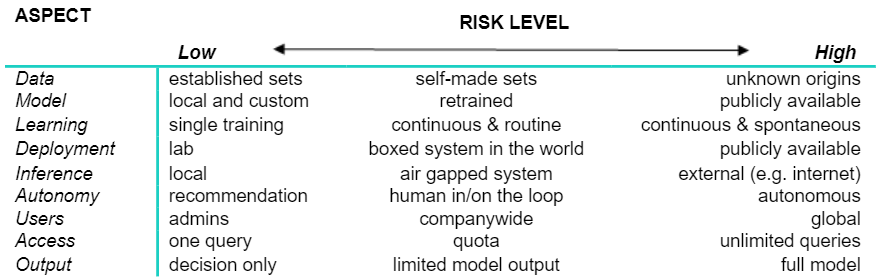

The AI you want to be safe and secure does not start as an AI, but as a collection of data and models that run on software and hardware. Later, your AI may become part of a larger system and run on different hardware out in the world. Protecting your AI-system requires thinking about each component individually first, then about how these components interact.

Together these aspects determine your risk exposure, ranging from low to high as seen in the table above. A threat leverages this exposure as an attack surface.

To the security and safety professional, the world is filled with such threats. In security this threat is typically an actor who has intent and a goal, while in safety it is the world itself that is a scary place. How to prioritize these threats is a major question.

From the lab to the operational design domain (ODD, i.e. where your AI does its work), the nature of the threats your AI faces will change throughout its life cycle.

These attacks can be destructive or exploitative.

Destructive attacks often originate in the development phase and continue to affect your system into deployment. There are three sub-types of destructive attacks to consider:

Evasion – cause the target AI to make a wrong prediction

Poisoning – degrade the performance of the target AI

Backdoor – trigger a given output via a given input in the target AI
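To make evasion concrete, here is a minimal Python sketch of one well-known evasion technique, the Fast Gradient Sign Method (FGSM), applied to a toy logistic-regression model. The weights, the input and the budget `epsilon` are made-up assumptions for illustration, not material from the report; real attacks target far larger models, but the idea is the same: a small, bounded change to every input feature pushes the prediction across the decision boundary.

```python
import math

# Hypothetical toy model: logistic regression with made-up weights.
WEIGHTS = [2.0, -1.0, 0.5]
BIAS = -0.25


def predict_proba(x):
    """Probability of class 1 under the toy logistic-regression model."""
    z = sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))


def predict(x):
    """Hard label: 1 if the probability of class 1 is at least 0.5."""
    return 1 if predict_proba(x) >= 0.5 else 0


def sign(g):
    """Return -1, 0 or 1 depending on the sign of g."""
    return (g > 0) - (g < 0)


def fgsm(x, true_label, epsilon):
    """Fast Gradient Sign Method: shift each feature by epsilon in the
    direction that increases the cross-entropy loss for true_label."""
    p = predict_proba(x)
    # For logistic regression, d(loss)/d(x_i) = (p - y) * w_i.
    grad = [(p - true_label) * w for w in WEIGHTS]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]


x = [0.5, 0.2, 0.1]  # clean input, classified as 1
x_adv = fgsm(x, true_label=1, epsilon=0.2)
print(predict(x), predict(x_adv))  # prints: 1 0
```

With `epsilon=0.1` the toy prediction survives; with `epsilon=0.2` it flips from 1 to 0, even though no single feature moved by more than 0.2.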

Exploitative attacks, officially called extractive attacks, are usually seen during deployment, and one could say (without casting blame prematurely) that their origins lie in poor practices and decisions during the development phase. These attacks seek to extract information about either the:

Model – features, weights, etc., or

Data – reconstruct the training data used, membership inference, etc.
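Membership inference can be surprisingly simple. The Python sketch below shows one basic variant, a loss-threshold attack: an overfit model tends to be more confident (lower loss) on examples it was trained on, so an attacker guesses “member” whenever the loss falls below a threshold. The model outputs and the threshold are hypothetical values chosen for illustration; in practice an attacker would have to calibrate the threshold, for example on data they know was not used for training.

```python
import math


def cross_entropy(prob_true_label):
    """Per-example loss, given the probability the model assigns to the true label."""
    return -math.log(max(prob_true_label, 1e-12))


def infer_membership(prob_true_label, threshold):
    """Loss-threshold membership inference: guess 'member' when the
    loss is below the threshold, i.e. the model is suspiciously confident."""
    return cross_entropy(prob_true_label) < threshold


# Hypothetical model outputs: probability assigned to the correct label.
observed = {
    "train_a": 0.99,   # was in the training set
    "train_b": 0.97,   # was in the training set
    "unseen_a": 0.70,  # was not
    "unseen_b": 0.55,  # was not
}
THRESHOLD = 0.1  # hypothetical; must be calibrated in a real attack

guesses = {name: infer_membership(p, THRESHOLD) for name, p in observed.items()}
print(guesses)
```

Here the two training examples have losses of roughly 0.01 and 0.03 and are flagged as members, while the unseen ones (roughly 0.36 and 0.60) are not. The gap between these losses is exactly the kind of leakage that better generalization helps to reduce.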

By knowing everything possible about our AI and the threats to it, we can see where these intersect as vulnerabilities. These vulnerabilities are the points of attack a threat will target, provided that by exploiting that vulnerability they can attain their goal.

Here one can see a major difference between security and safety: security has an extra intervention point – the intent of the attacker – which can be manipulated to reduce the probability of a threat. For safety functions, only directly addressing the vulnerability can reduce the probability of system failure. In this sense, addressing safety is harder, but it also results in more robustness than a security-focused defense strategy (which can often be solved by inducing transference: you need only be a harder target than others).

Planning AI Security

With AI security 101 completed, how does this translate into some guidelines?

First, we know our AI’s environment is computer-based, and we need to control that environment. Thus, following current IT security best practices is your first line of defense. Measures should cover not only hardware and software, but also data and users.

Here we already see a problem: the environment will change. So, second, you need to know what stage of its life cycle your AI is in so that you can plan. The vulnerabilities and threats will be different at each stage, for two reasons. First, different components are more or less open or closed in different phases: early on, your training data is the vulnerability point; later on, the inference data is. Second, the environment itself will change: at first your model will be in the lab, but most models will eventually operate in much more complex open-world environments.

Lastly, don’t just plan: test through red teaming. How many tries (iterations) does it take your red team to exploit a vulnerability? How sensitive is the model to changes (perturbations) in the input? How much or how little knowledge did your red team need to degrade or exploit your AI? These exercises give you the information you need to fix vulnerabilities.
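The first two red-teaming questions can be turned into numbers. The Python sketch below is a hypothetical black-box probe: the red team may only call `predict()`, grows the perturbation budget `epsilon` step by step, and at each budget tries every corner of the allowed box (each feature shifted by plus or minus `epsilon`). It reports the smallest budget that flips the label and how many queries that took. The toy model, input and step size are assumptions for illustration, and exhaustively trying all 2^n corners is only feasible for tiny inputs; real red teams use smarter search.

```python
import itertools

# Hypothetical black-box model: the red team can only call predict().
WEIGHTS = [2.0, -1.0, 0.5]
BIAS = -0.25


def predict(x):
    """Hard label from a toy linear model."""
    score = sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS
    return 1 if score >= 0 else 0


def probe_sensitivity(x, step=0.05, max_steps=10):
    """Increase the budget epsilon in fixed steps; at each budget, try every
    corner of the L-infinity ball around x. Return (epsilon, queries) for the
    first label flip, or (None, queries) if no flip was found."""
    original = predict(x)
    queries = 0
    for k in range(1, max_steps + 1):
        epsilon = step * k
        for signs in itertools.product((-1, 1), repeat=len(x)):
            queries += 1
            candidate = [xi + epsilon * s for xi, s in zip(x, signs)]
            if predict(candidate) != original:
                return epsilon, queries
    return None, queries


eps, n_queries = probe_sensitivity([0.5, 0.2, 0.1])
print(f"label flipped at epsilon={eps:.2f} after {n_queries} queries")
```

For this toy model the label survives budgets up to 0.15 and flips at 0.20 after 27 queries. Tracking both numbers across model versions gives a simple, repeatable signal of whether a fix actually made the model harder to fool.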

Operationalizing AI security

Now you are ready to adapt your AI development and deployment so that you can verify your AI is safer and more secure.

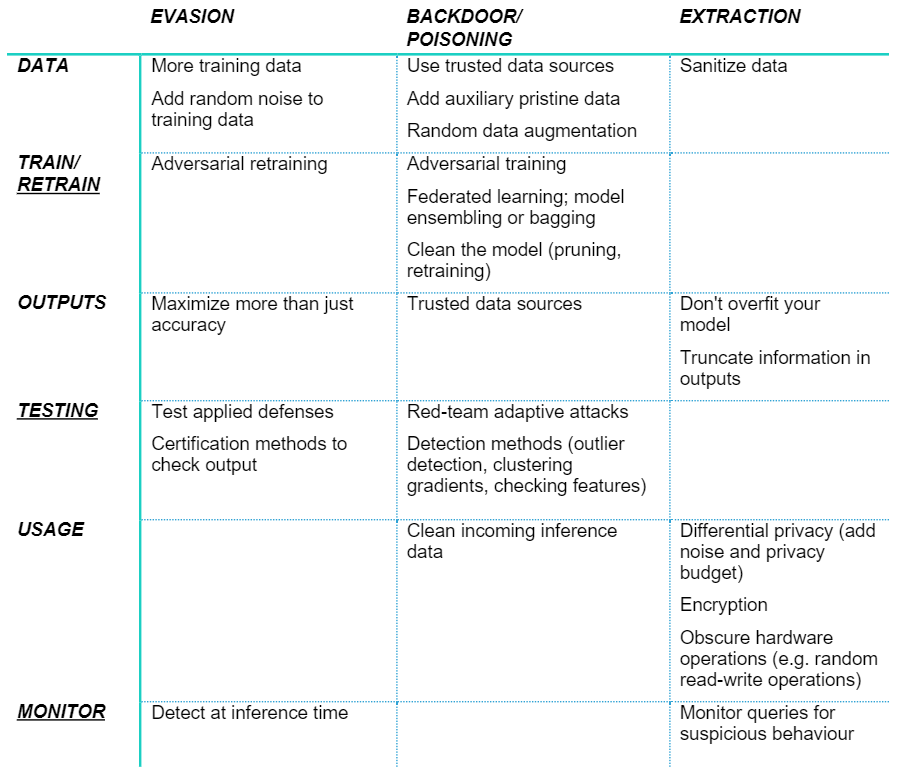

There is a range of techniques and algorithms available to improve adversarial robustness. However, your model architecture will constrain your options, as will your end use case and its requirements (for example, precision versus recall). Additionally, some techniques are useful only for verification, not for directly improving robustness.

We’ve listed the major methods to robust-ize your AI in the table below. Don’t worry if you do not immediately recognize each method: having the vocabulary and background to ask the right questions at the right time is the first step.

Looking at this table, one can see that every other category (underlined for ease of identification) has something in common: retraining, testing, and monitoring. These are all points to reassess the security and safety of your AI-system, then to go back as needed to re-plan and adapt.

Tradeoffs and Defense-in-Depth

Astute observers will have noticed a problem with the above list: some elements of a good defense conflict with each other or with the operation of the deployed AI-system. Indeed, this is a core principle of security: it is about managing tradeoffs.

All defenses and methods to increase adversarial robustness come at a cost of time, computational overhead, and/or sometimes even performance (e.g. accuracy).

This is why it is critical to understand the threats in light of the vulnerabilities particular to the life-cycle stage your AI is in. That understanding enables risk management and mitigation, which is what remains when perfect defense and complete safety are impossible.

Rather, the best practice is defense-in-depth: using different techniques at each stage of the AI-system’s life cycle and at each state the information passes through. This way, if one defense proves insufficient, an attacker still has further lines to get through at the next stage, and there is no single point of failure.

Securing your AI is thus not just about being a hard target; it is also about being a moving target. Just as your AI must adapt to its deployment environment, you must adapt to ensure its safe and secure operation no matter what the world throws at it.

* Other project partners were Fraunhofer AISEC, Fraunhofer IAIS, TÜV Informationstechnik GmbH – TÜViT (TÜV NORD GROUP).