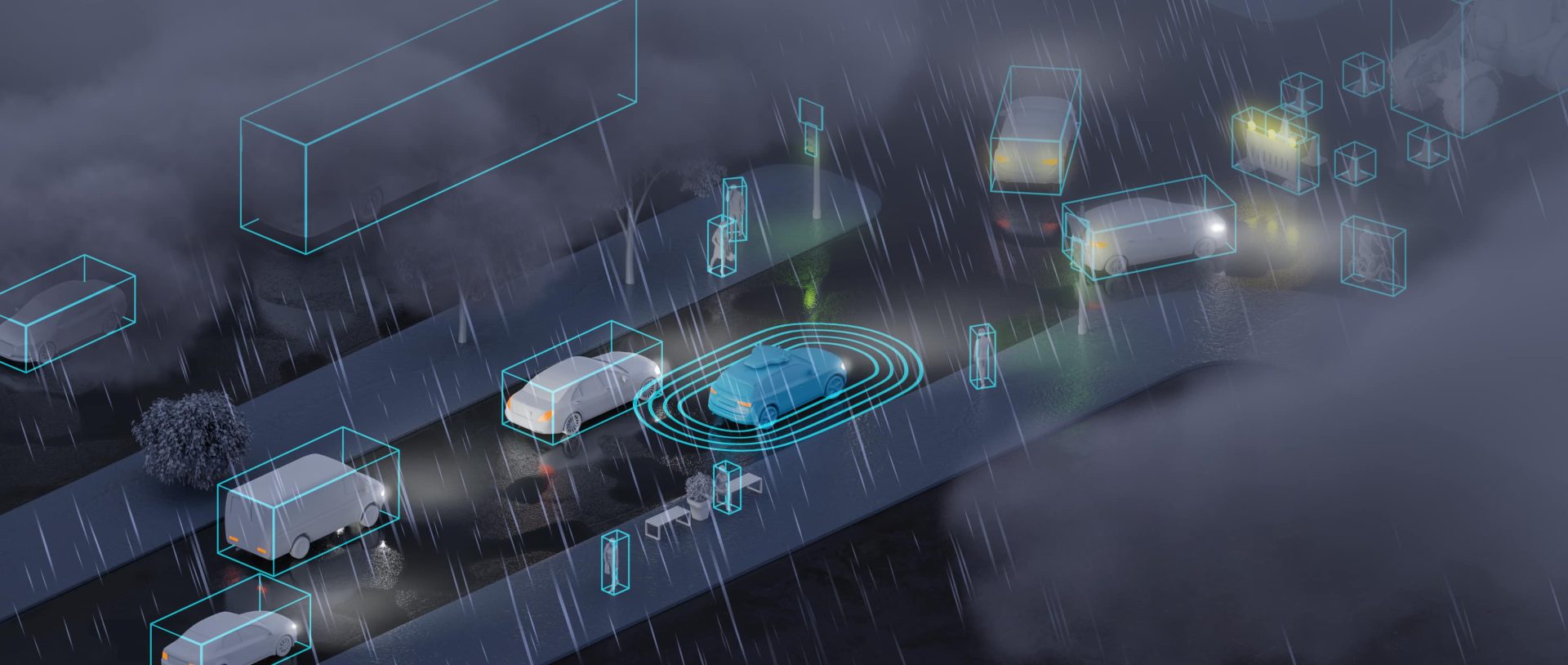

Use case: Testing perception performance in demanding weather conditions

Overview: Client and task

When the perception team of an OEM came to neurocat in need of advanced testing capabilities, we were able to offer the comprehensive solution they needed to move from the early development stages to the next level.

This use case summarizes our work with that client to ensure that an object classification model correctly classified road signage in the various rainy weather conditions common in the operational design domain (ODD).

Using our aidkit software, the client leveraged augmented data to test for safety and data gaps, pinpointing those gaps in enough detail that they could be filled in a lean, targeted manner.

In this way, the client was able to identify its best machine learning (ML) model and plan the steps needed to improve its performance until it met internal safety and technical requirements.

The problem

How to validate safe areas of operation in an ODD and identify unsafe areas to inform data campaigns

The client needed to test several candidate perception models to understand their performance limitations and vulnerabilities. Their aim was to identify the best model and validate its safety within an ODD that included variations on wet-weather conditions, from mist to downpours. The client was uncertain if they had collected enough images of each condition, or captured the overall distribution of possible conditions, to properly train and test their models.

The client approached neurocat for a solution so they could, first, validate safe areas of operation in a time-efficient manner and, second, get a clearer picture of the conditions under which their model's performance did not meet the given technical performance requirements. In this way they planned to design a lean data campaign that collected only images likely to be relevant to critical performance weaknesses and focused annotation on these.

Analysis of problem-solution

Customization and integration of aidkit for client's needs

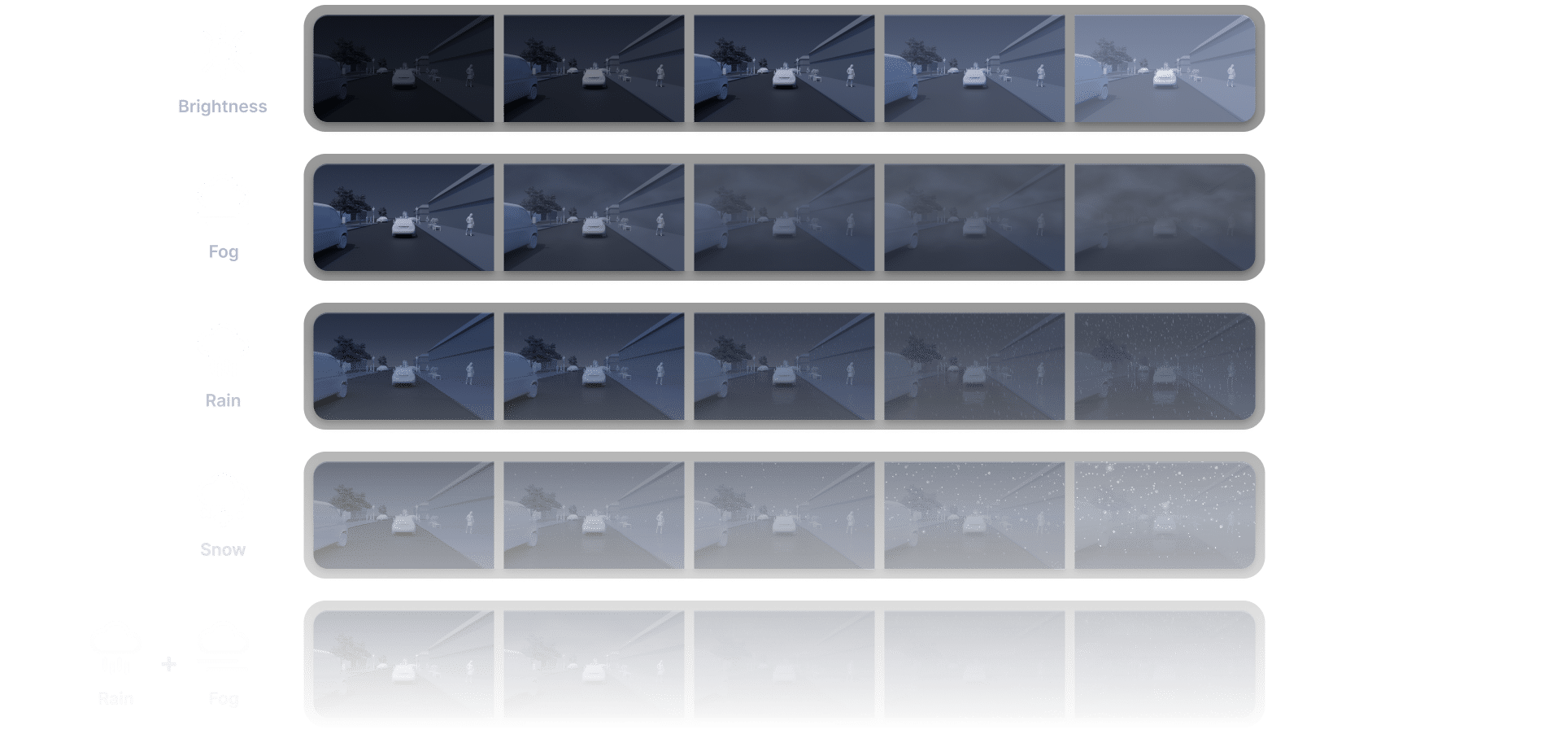

Because the client was under time pressure, we recommended a scalable aidkit version. Analyzing the client's ODD metadata, we identified not only a lack of rain variation but also a lack of brightness variation. Thus, suitable data augmentation techniques were added to the customer deployment. Lastly, we analyzed the customer's images and adapted our corruptions to mimic the customer's setup (camera type, position, speed, etc.).

Every AI model is unique and requires a unique solution. Thus, to supplement aidkit's off-the-shelf capabilities, we consult with clients on customization in the early stages and provide analytical support in the later stages as requested. In this case, consulting with the client in advance and drafting our solution took about one and a half weeks.

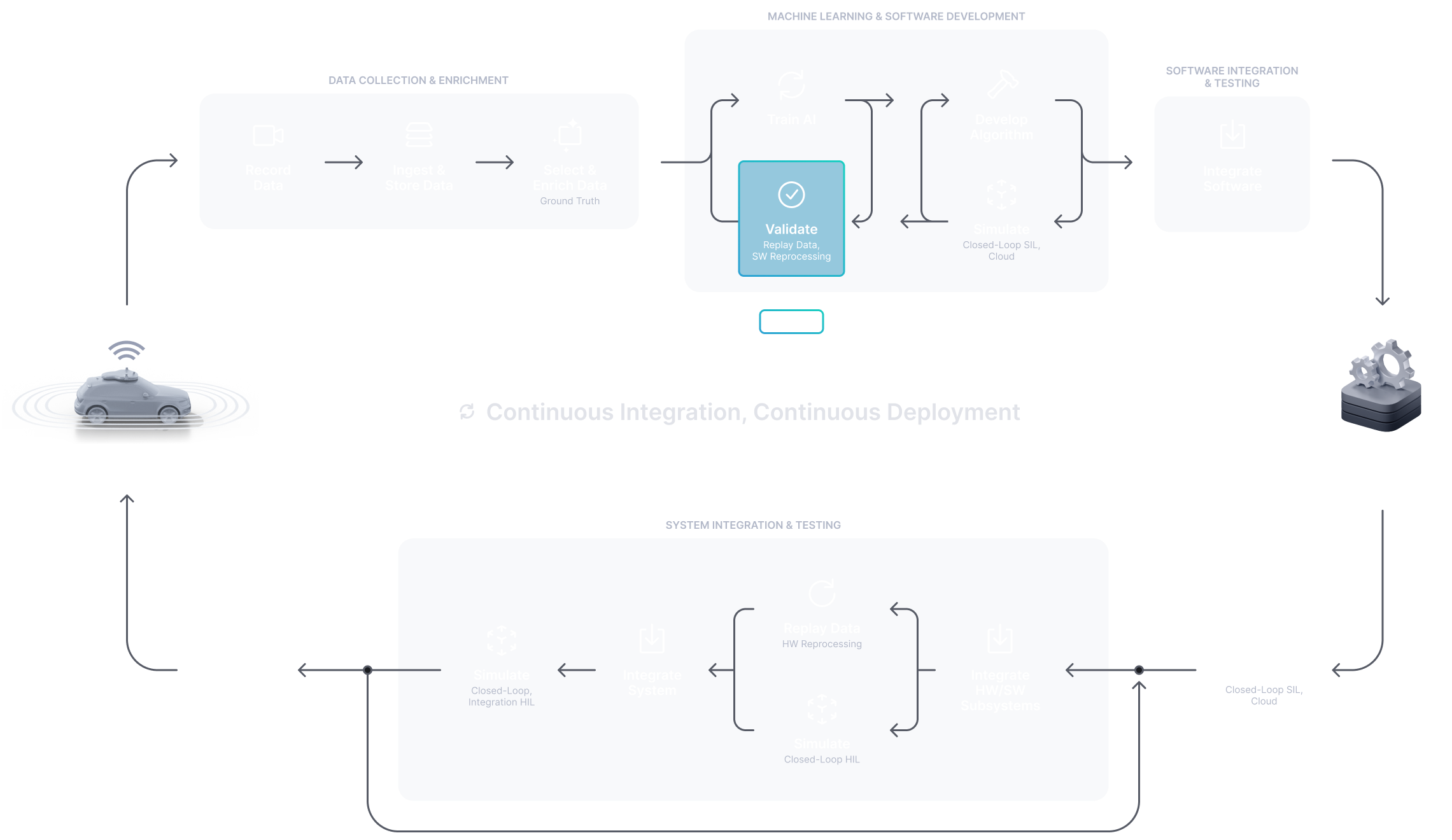

In cooperation with the client’s safety manager we defined technical requirements for the perception performance, which included failure rate and augmentation intensity metrics, as well as related threshold values. The integration of aidkit into the customer's existing MLOps environment did not need any further customization and was completed within several days.

The solution

Multi-model comparative testing with augmented re-training data

Over a three-month development cycle, the client used aidkit to test thirty different candidate models. Using the results provided, they designed a lean, targeted data collection to improve the best-performing model. They also decided to use the augmented data to retrain their models.

The testing enabled the client to identify the best model, which performed above the mean model performance across most ODD scenarios. Retraining with the new images and artefacts further improved model performance by ~10%. The model now met the agreed-upon requirements and was moved to the next development phase.

01

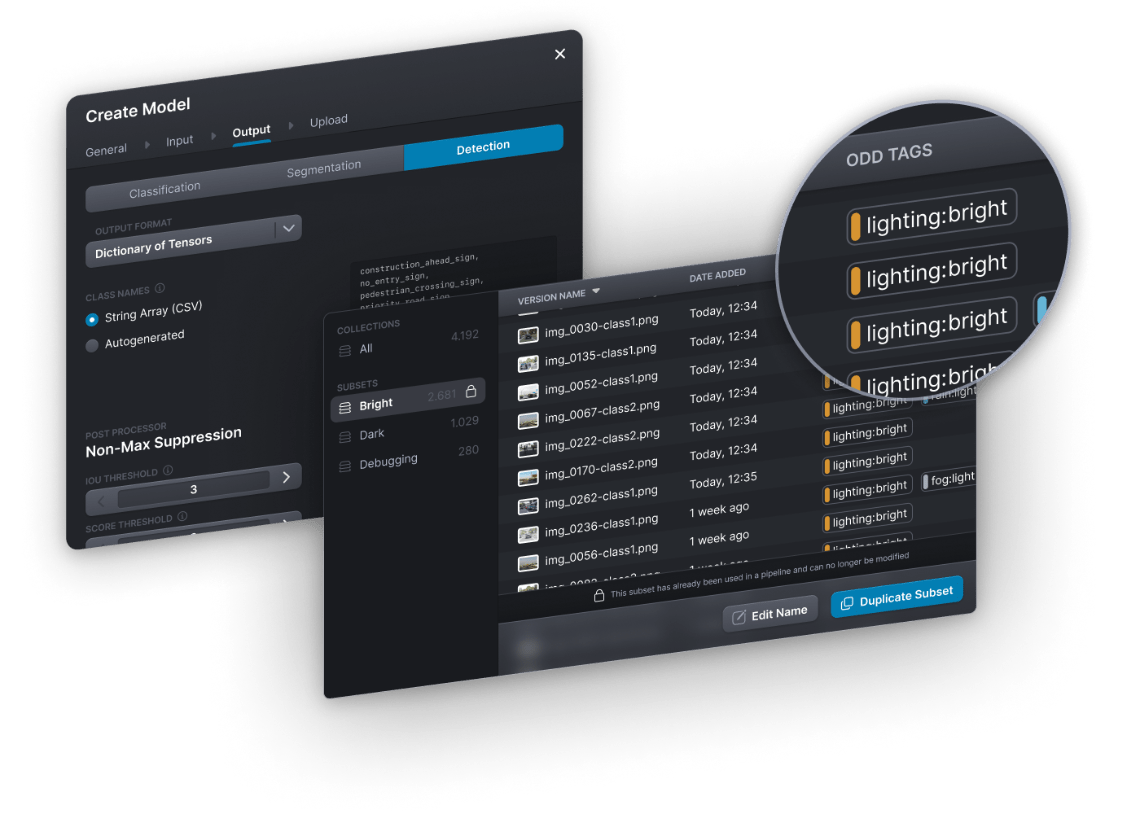

Loading models and data

The client trained a variety of candidate models for traffic sign classification tasks using PyTorch. Candidates differed in training parameters and architectural elements. The trained model graphs were uploaded using the web interface. The specifications of models and data were quickly added with the drop-down menus. Next, output classes were added via a CSV file before confirming final model upload.

Subsequently, color image data, consisting of the annotated test data already collected, was uploaded to serve as the basis for the augmentations. Data was stored securely on a shared AWS instance. The client created three subsets of the testing data based on ODD tags not specifically related to wet weather (wind, brightness, and glare).
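
To illustrate the subsetting step, here is a minimal Python sketch of grouping test records by ODD tag. The record layout, file names and field names are assumptions made for illustration; the client's actual metadata schema is not described here.

```python
# Hypothetical test-set records; field names and values are illustrative only.
test_records = [
    {"image": "img_0001.png", "label": "stop", "odd_tags": ["glare"]},
    {"image": "img_0002.png", "label": "yield", "odd_tags": ["wind", "brightness"]},
    {"image": "img_0003.png", "label": "speed_limit_50", "odd_tags": []},
    {"image": "img_0004.png", "label": "stop", "odd_tags": ["brightness"]},
]

# The three ODD tags named in the text.
SUBSET_TAGS = ("wind", "brightness", "glare")


def split_by_odd_tag(records, tags=SUBSET_TAGS):
    """Build one subset per ODD tag; a record with several tags lands in several subsets."""
    subsets = {tag: [] for tag in tags}
    for record in records:
        for tag in record["odd_tags"]:
            if tag in subsets:
                subsets[tag].append(record)
    return subsets


subsets = split_by_odd_tag(test_records)
subset_sizes = {tag: len(items) for tag, items in subsets.items()}
print(subset_sizes)
```

Records without any of the three tags are simply left out of the subsets, and the subsets may overlap. Whether the client's subsets were disjoint is not stated.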

02

Setup and configuration

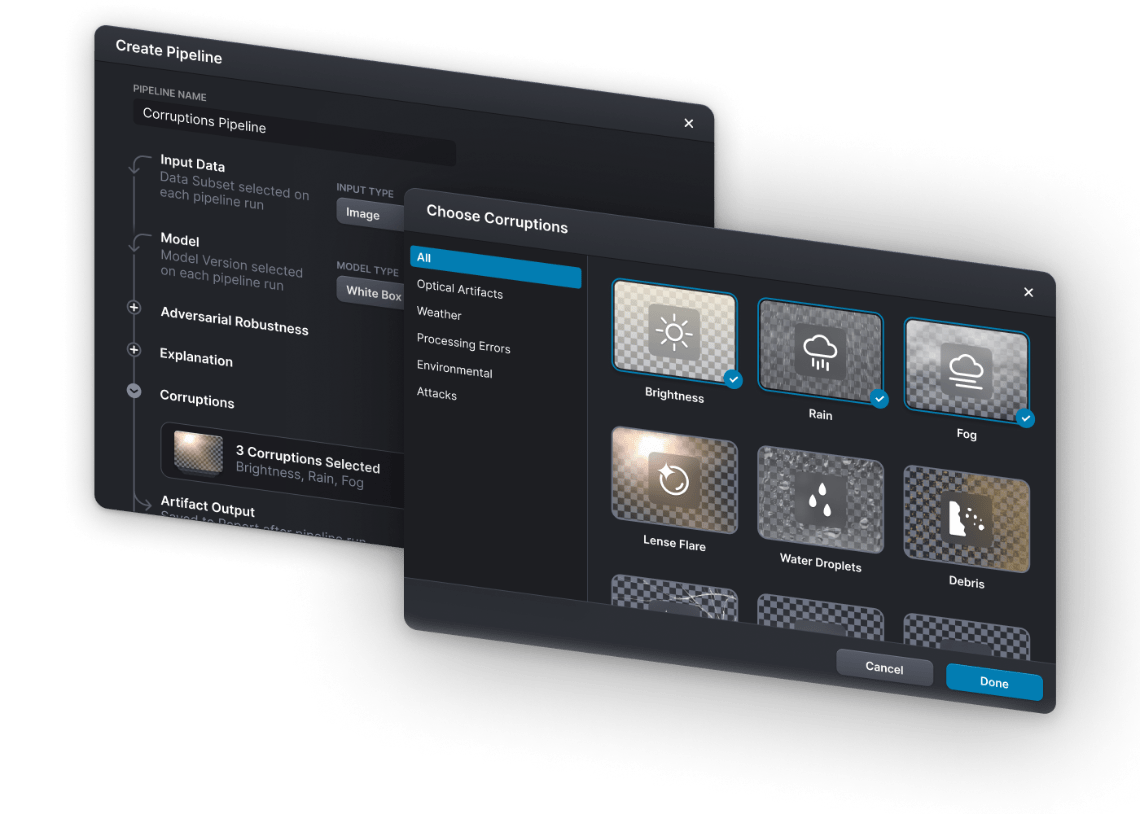

Next, the client set up the pipelines for the different data augmentations they wanted to test models against. Corruptions for rain were included, and all default evaluation metrics were selected (L0, L1, L2, structural dissimilarity, etc.).

After setting up the pipelines, the customer moved to their MLOps environment for testing (accessing aidkit with their authentication token via the Python client). The client used the Azure ML platform, for which neurocat provided appropriate integration scripts.
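
For readers unfamiliar with the distance metrics named above, the following sketch computes L0, L1 and L2 between an original and an augmented image using their textbook definitions. It treats an image as a flat sequence of pixel values and is not a description of how aidkit computes them internally. The function name and sample values are hypothetical.

```python
import math


def perturbation_norms(original, augmented):
    """Return the L0, L1 and L2 distances between two equally sized pixel sequences."""
    if len(original) != len(augmented):
        raise ValueError("images must have the same number of pixel values")
    diffs = [a - o for o, a in zip(original, augmented)]
    return {
        "L0": sum(1 for d in diffs if d != 0),       # number of pixel values that changed
        "L1": sum(abs(d) for d in diffs),            # total absolute change
        "L2": math.sqrt(sum(d * d for d in diffs)),  # Euclidean size of the change
    }


# Toy 4-pixel example: two values change, by +3 and -4.
original = [10, 20, 30, 40]
augmented = [10, 23, 26, 40]
norms = perturbation_norms(original, augmented)
print(norms)
```

These metrics quantify how strongly an augmentation altered an image, which makes it possible to relate model failures to the size of the perturbation.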

03

Running the tests

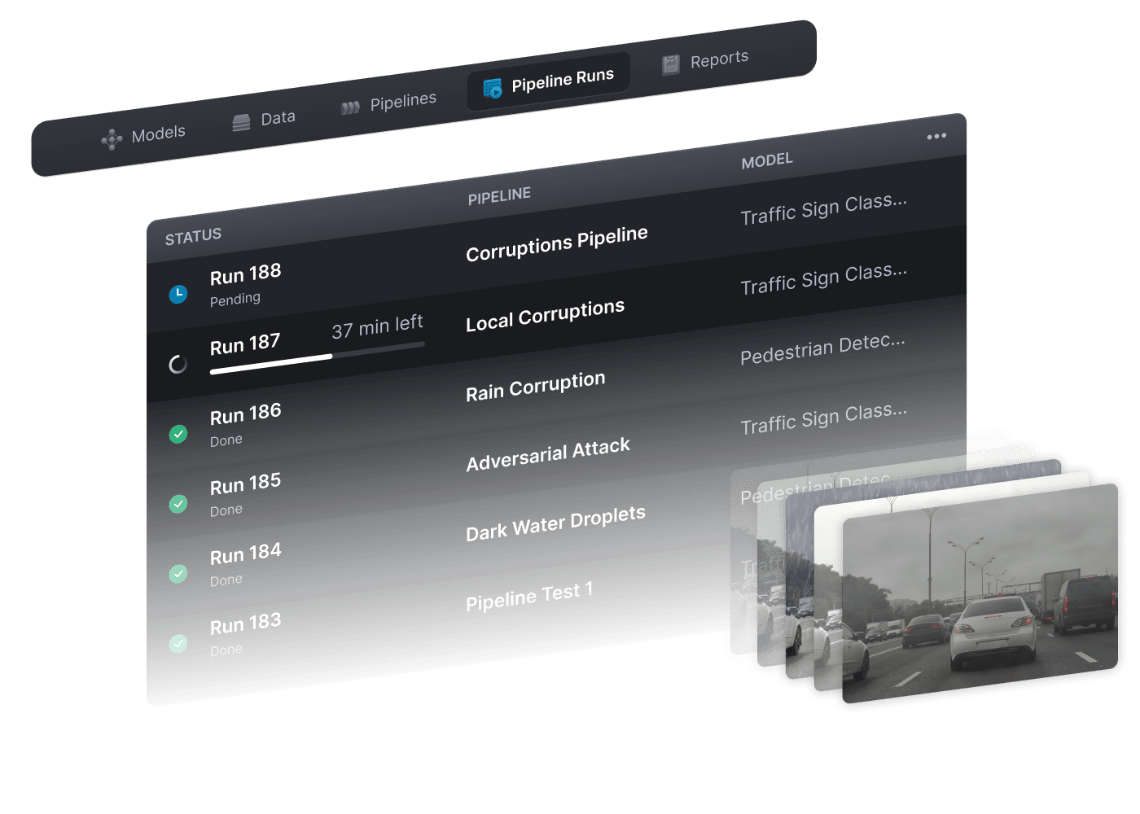

Tests were run with horizontal scaling using 64 vCPUs. Each test took between 30 minutes and 3 hours in total. Each model was tested against images augmented, at increasing intensity, with the corruptions selected for that pipeline. Depending on the pipeline, between 500,000 and 750,000 augmented images (10,000 images × 10-15 corruptions × 5 intensity levels) were generated for each test.

After a test completed, a new model version was introduced into aidkit via the Python client, allowing the customer to continuously monitor the robustness of new model versions (in Azure). After a pipeline finished, the customer reviewed the report comparing the models, which indicated which model version showed the highest robustness according to the methods specified in the robustness evaluation pipeline.
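
The size of each test follows from the figures quoted above: every base image is augmented once per corruption and intensity level. The sketch below works through that arithmetic. The corruption names are placeholders, and the assumption that each (corruption, intensity) pair is applied exactly once per image is ours.

```python
from itertools import product

BASE_IMAGES = 10_000   # base test images per test, as quoted in the text
INTENSITY_LEVELS = 5   # intensity levels per corruption, as quoted in the text


def augmentation_grid(corruptions, intensity_levels=INTENSITY_LEVELS):
    """Every (corruption, intensity) pair applied to each base image."""
    return list(product(corruptions, range(1, intensity_levels + 1)))


def augmented_image_count(base_images, corruptions, intensity_levels=INTENSITY_LEVELS):
    """Total augmented images: one per base image and grid entry."""
    return base_images * len(augmentation_grid(corruptions, intensity_levels))


# Placeholder corruption names for the smallest and largest pipelines (10 and 15 corruptions).
small_pipeline = [f"corruption_{i}" for i in range(10)]
large_pipeline = [f"corruption_{i}" for i in range(15)]

low = augmented_image_count(BASE_IMAGES, small_pipeline)
high = augmented_image_count(BASE_IMAGES, large_pipeline)
print(low, high)
```

With 10 corruptions the grid yields 500,000 augmented images, and with 15 it yields 750,000.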

04

Reviewing the reporting results

As quality assessment of perception models can be complex, we supported the client in this process. Together we determined that candidate model two outperformed all other candidates. The comparison plot of model failure rates (MFR) showed this clearly, with candidate two having an MFR of 17% at medium rain intensity.

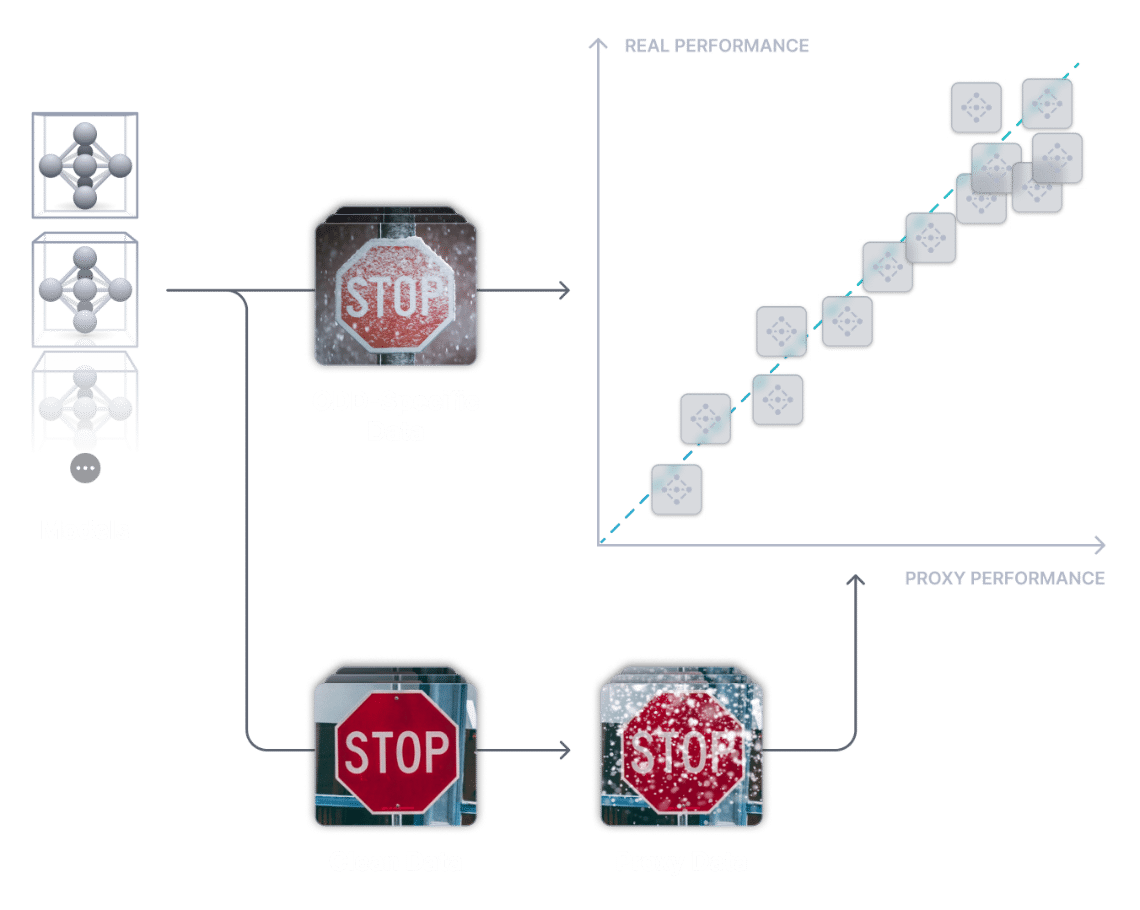

However, the model still failed to meet the robustness level agreed with the client, which was an MFR of 10% at light and medium intensities. The MFR in bright lighting conditions was especially problematic, averaging 21% for both light and medium rain. Experiments to evaluate the validity of the augmentation methods confirmed that performance on the augmented data points correlated with real-world performance.
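
As a rough illustration of this check, the sketch below computes a failure rate and compares it with the agreed threshold. It assumes the MFR is the fraction of augmented images the model misclassifies and that the requirement is met when the MFR is at or below 10%; the exact definitions used in aidkit are not given here. The toy predictions are invented, and only the 17% figure comes from the results above.

```python
MFR_THRESHOLD = 0.10  # agreed requirement: 10% MFR at light and medium intensities


def model_failure_rate(predictions, labels):
    """Fraction of samples whose predicted class differs from the annotated label."""
    if not labels or len(predictions) != len(labels):
        raise ValueError("predictions and labels must be non-empty and equally long")
    failures = sum(1 for p, y in zip(predictions, labels) if p != y)
    return failures / len(labels)


def meets_requirement(mfr_by_intensity, required_levels, threshold=MFR_THRESHOLD):
    """Map each required intensity level to True if its MFR is within the threshold."""
    return {level: mfr_by_intensity[level] <= threshold for level in required_levels}


# Invented toy data: 2 of 5 augmented images are misclassified.
labels = ["stop", "yield", "stop", "stop", "yield"]
predictions = ["stop", "stop", "stop", "yield", "yield"]
toy_mfr = model_failure_rate(predictions, labels)

# Reported figure for candidate two at medium rain intensity.
candidate_two = {"medium": 0.17}
verdict = meets_requirement(candidate_two, required_levels=("medium",))
print(toy_mfr, verdict)
```

With the reported 17% at medium intensity, the check returns `False` for that level, matching the conclusion that candidate two did not yet meet the requirement.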

05

Supplementary collection and iteration

Based on the results, candidate model two was selected for further development. The client designed a data campaign to collect and annotate images matching the conditions where this model failed. These images were used for model re-training and, subsequently, to re-test the model in aidkit. Once no more testing was required, the client retrained the model using the augmented data to further boost performance.

As a result, the client was able to push down the MFR of model two in the two conditions of concern: medium rain intensities, and brighter lighting conditions in both light and medium rain. The new failure rates were within the level established with the safety manager to demonstrate the removal of unreasonable risk within the ODD.
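
One simple way to turn such results into a collection plan is to list the conditions whose MFR exceeds the threshold, worst first. The sketch below does this. The condition names are our own labels, the 17% and 21% values are the figures reported above, and the remaining value is a made-up placeholder. It is an illustration only, not the client's actual planning procedure.

```python
def campaign_targets(mfr_by_condition, threshold=0.10):
    """Return the conditions whose MFR exceeds the threshold, worst first."""
    failing = {cond: mfr for cond, mfr in mfr_by_condition.items() if mfr > threshold}
    return sorted(failing, key=lambda cond: failing[cond], reverse=True)


mfr_by_condition = {
    "medium rain, all lighting": 0.17,  # reported above
    "light rain, bright": 0.21,         # reported above
    "medium rain, bright": 0.21,        # reported above
    "light rain, all lighting": 0.06,   # placeholder value for illustration
}

targets = campaign_targets(mfr_by_condition)
print(targets)
```

Conditions that already meet the threshold drop out of the list, so collection and annotation effort goes only to the conditions that fail.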

Outcomes

A safe perception model on time and under cost

By testing their ML perception models with aidkit, the client was able to quickly identify its best-performing model for the given ODD. They were also able to identify specifically where this model underperformed by using augmented data to expand their testing. Using this information, the client could efficiently allocate resources for additional collection and labelling in a way that saved time and reduced costs while maximizing the training value.

Subsequently, the client was able to use this new data to re-train the model and validate its safety with additional aidkit tests. They then used the augmented data for further retraining to boost model performance. In doing this, the client validated safe performance in a much wider range of conditions than would have been possible with only real-world data.

Driving safe perception

Continue your journey with us

Don’t just manage risks. Mitigate your perception system’s chances of encountering unknown situations and enhance its resilience.