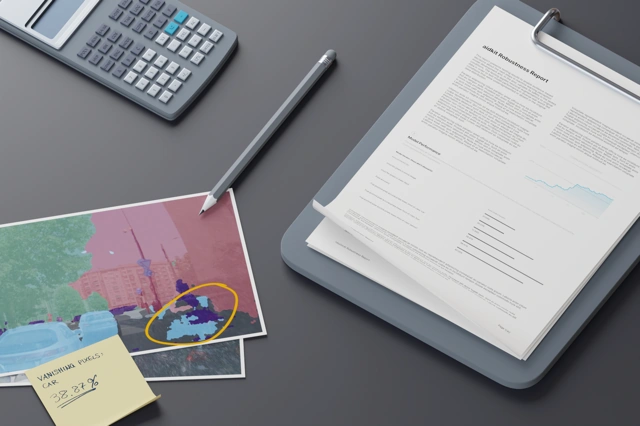

aidkit is the comprehensive solution for ML perception

aidkit's data augmentation functionality is built for you to use how you want. Its full testing suite helps you validate the safety of your system; its augmentations are ready to be exported to enhance your models' performance.

aidkit's purpose is seamless, scalable data augmentation that breaks you out of endless development loops and gets your ADAS/ADS perception system on the road to deployment.

Whether you need data and testing or data for training, aidkit is in your corner. Click on your use case below to learn more!

aidkit and more

Discover more features and extensions to aidkit

At neurocat, we keep a close eye on the market and our customers' needs so we can add new features to aidkit or create extensions for new tasks that arise in the fast-evolving field of ML development and ADAS/AD applications.

Driving safe perception

Continue your journey with us

Want to learn more about the above use cases or have your own unique use case? Get in touch with us and start moving your perception system development forward.