While we at neurocat create many types of data augmentations, we are especially proud of our rain augmentation. Rain is complex and diverse, and capturing it realistically requires creative thinking and tricky implementation. So today we are going to discuss this complexity and how we deal with it to create rain data whose distribution and diversity are more representative of the real world than what you could collect from reality itself.

About the rain

You do not need to be told that rain comes in many different forms. Take for instance the three types of rain in the image below.

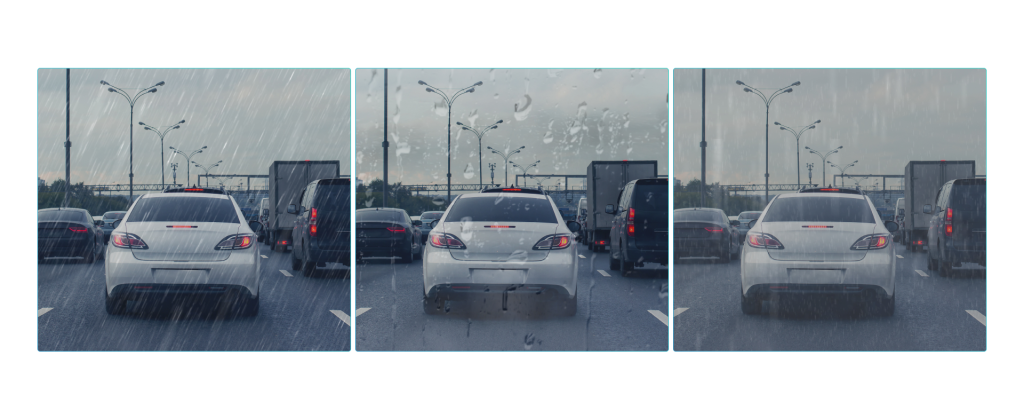

Often, when we want to see whether our perception models fail, we assume they are robust up to a certain intensity of rain. So which of the pictured rains is the most intense? You likely said image one. Now look at the images below and ask the same question.

Now it's harder, but you may be leaning toward image three. Yet it's only drizzle! How can that possibly be more intense than the downpour in image one?

The answer is a complex equation that considers the size, frequency, and dispersion of every raindrop. Yet even these objective properties of the rain are not enough to classify and capture it. So let's look at what it is like to be out in the rain, so that you can make sure your perception model is trained on data that reflects it.
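To make the interplay of drop size and drop count concrete, here is a back-of-the-envelope sketch using standard atmospheric-optics approximations, not our production model. The amount of water scales with the number of drops times D³, while the light a population of drops blocks (its extinction coefficient) scales with the number of drops times D², with an extinction efficiency of about 2 for drops much larger than visible wavelengths. The function name and both drop populations are hypothetical.

```python
import math

WATER_DENSITY_G_PER_M3 = 1e6  # 1000 kg/m^3 expressed in g/m^3


def rain_metrics(drops_per_m3: float, diameter_mm: float) -> tuple[float, float, float]:
    """Return (liquid water content [g/m^3], extinction coefficient [1/m],
    visibility [m]) for a hypothetical population of equal-sized drops."""
    d_m = diameter_mm * 1e-3
    # Water content: number of drops times the volume of one sphere (pi/6 * D^3)
    lwc = drops_per_m3 * (math.pi / 6) * d_m**3 * WATER_DENSITY_G_PER_M3
    # Extinction: number of drops times cross-section (pi/4 * D^2) times efficiency ~2
    extinction = drops_per_m3 * 2.0 * (math.pi / 4) * d_m**2
    # Koschmieder's law with a 2% contrast threshold
    visibility = 3.912 / extinction
    return lwc, extinction, visibility


# Hypothetical populations: a few large drops vs. many small ones
downpour = rain_metrics(drops_per_m3=500, diameter_mm=2.0)
drizzle = rain_metrics(drops_per_m3=100_000, diameter_mm=0.2)
print(f"downpour: {downpour[0]:.2f} g/m^3, visibility {downpour[2]:.0f} m")
print(f"drizzle:  {drizzle[0]:.2f} g/m^3, visibility {drizzle[2]:.0f} m")
```

With these made-up numbers, the drizzle holds only about a fifth of the downpour's water yet blocks twice as much light, roughly halving visibility (about 1.2 km versus 0.6 km). Visually, many small drops can matter more than a few large ones.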

Being in the rain

The diversity and complexity of rain described above are magnified by an important additional factor. Look at the images below.

Which is most intense? Actually, the rain has not changed at all: it's the same rain in every image. What has changed is the camera: its position in the rain, its direction, and its velocity. Direction and velocity matter because your camera will be mounted on an autonomous vehicle that is moving at speed from one place to another.

This speed and direction are, however, relative. How the rain appears in the image depends not on the camera/vehicle or the rain alone, but on their interaction as these two moving objects meet.
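A minimal sketch of this relativity: from the camera's point of view, what matters is the rain velocity minus the vehicle velocity. The coordinate convention, the 9 m/s fall speed of windless rain, and the function names are illustrative assumptions.

```python
import math


def apparent_rain_velocity(rain_mps, vehicle_mps):
    """Velocity of the rain as seen from the vehicle (x forward, y left, z up, in m/s)."""
    return tuple(r - v for r, v in zip(rain_mps, vehicle_mps))


def tilt_from_vertical_deg(velocity):
    """Angle between the apparent rain direction and straight down, in degrees."""
    vx, vy, vz = velocity
    return math.degrees(math.atan2(math.hypot(vx, vy), -vz))


rain = (0.0, 0.0, -9.0)  # assumed: windless rain falling at ~9 m/s
for speed in (0.0, 10.0, 30.0):
    rel = apparent_rain_velocity(rain, (speed, 0.0, 0.0))
    print(f"{speed:4.0f} m/s -> rain tilted {tilt_from_vertical_deg(rel):.0f} deg from vertical")
```

Even at a moderate 10 m/s (36 km/h), the rain appears to come at the car at nearly 50 degrees from vertical, although the rain itself is unchanged.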

Let's now turn to the outcome of this interaction and outline how we model rain so that each drop is unique.

Making it rain

At neurocat, we have thought long and hard about the complexity of rain. Strictly speaking, a machine learning model does not care how your augmented data looks: the rain does not need to look real to a machine, as long as the augmentation captures the mathematical nature of the phenomenon.

Nonetheless, our goal is to create augmented data that looks real to machines and humans alike. Safety has two sides: objective and subjective. A visually accurate augmentation builds trust in the machine's findings, because it lets humans validate the data with their own eyes.

Let’s take a look at how we create three types of realistic rain images (streaks, droplets, and composite effects) that satisfy both statistical and visual validation criteria.

Rain streaks

Rain streaking across your image is our most “classic” augmentation. To produce such streaks, which we call strokes, we first sample raindrops in a 3D space. Each drop has a size and a trajectory that follow a normal distribution (i.e., most drops are similar to one another, with only a few outliers).
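The sampling step can be sketched with NumPy. Everything here is an illustrative assumption rather than our production setup: the camera-frame convention, the box the drops are sampled in, the parameter values, and representing each trajectory by a single tilt angle.

```python
import numpy as np


def sample_drops(n, half_width=5.0, half_height=3.0, near=0.5, far=20.0,
                 mean_diam_mm=1.5, std_diam_mm=0.4,
                 mean_tilt_deg=0.0, std_tilt_deg=3.0, seed=None):
    """Sample n raindrops in a box in front of the camera (hypothetical parameters)."""
    rng = np.random.default_rng(seed)
    # Camera frame (assumed): x right, y down, z forward, all in metres
    x = rng.uniform(-half_width, half_width, n)
    y = rng.uniform(-half_height, half_height, n)
    z = rng.uniform(near, far, n)
    positions = np.stack([x, y, z], axis=1)
    # Drop sizes: normally distributed, clipped so no drop is smaller than 0.1 mm
    diameters_mm = np.clip(rng.normal(mean_diam_mm, std_diam_mm, n), 0.1, None)
    # Trajectories: tilt of the fall direction away from vertical, also normal
    tilts_deg = rng.normal(mean_tilt_deg, std_tilt_deg, n)
    return positions, diameters_mm, tilts_deg
```

Clipping the normal distribution at 0.1 mm keeps the rare negative samples from producing impossible drop sizes; each sampled size is then the starting point for the stroke size.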

Drop size alone is enough to calculate the stroke size, as the two are correlated. Computing stroke direction and length, however, also requires information about the camera and the car.

Computing stroke length requires knowing the camera's shutter speed (exposure time) along with the fall speed of the drops.
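Under a pinhole-camera assumption, stroke length and width follow directly from these quantities. The sketch below assumes the drop moves parallel to the image plane and that the focal length is already known in pixels; the function names are hypothetical.

```python
def stroke_length_px(fall_speed_mps, exposure_s, depth_m, focal_px):
    """Approximate streak length in pixels for a drop moving parallel to the image plane."""
    # Distance the drop travels while the shutter is open
    travel_m = fall_speed_mps * exposure_s
    # Pinhole projection: image size scales with focal length / depth
    return focal_px * travel_m / depth_m


def stroke_width_px(diameter_mm, depth_m, focal_px):
    """Approximate streak width in pixels: the projected drop diameter."""
    return focal_px * (diameter_mm * 1e-3) / depth_m
```

For example, a drop falling at 6 m/s, 5 m in front of a camera with a 1000 px focal length and a 1/100 s exposure leaves a streak of about 12 px, while a 2 mm drop at that distance is well under one pixel wide.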

Lastly, stroke direction is calculated by considering the camera direction and field of view, and the speed of the vehicle relative to the natural fall direction of the rain.
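One way to combine these inputs is to project each drop's position at the moment the shutter opens and at the moment it closes; the segment between the two image points gives the stroke's direction (and its length). This sketch assumes an undistorted pinhole camera looking along the direction of travel, with a hypothetical image size and field of view; all function names are illustrative.

```python
import math


def focal_length_px(image_width_px, horizontal_fov_deg):
    # Pinhole model: half the image width subtends half the horizontal field of view
    return (image_width_px / 2) / math.tan(math.radians(horizontal_fov_deg) / 2)


def project(point, focal_px, cx, cy):
    # Camera frame (assumed): x right, y down, z forward; requires z > 0
    x, y, z = point
    return cx + focal_px * x / z, cy + focal_px * y / z


def stroke_endpoints(position, rain_velocity, vehicle_velocity, exposure_s,
                     image_width_px=1920, image_height_px=1080, hfov_deg=90.0):
    f = focal_length_px(image_width_px, hfov_deg)
    cx, cy = image_width_px / 2, image_height_px / 2
    # Velocity of the drop relative to the moving camera
    rel = [r - v for r, v in zip(rain_velocity, vehicle_velocity)]
    # Drop position when the shutter closes
    end = [p + u * exposure_s for p, u in zip(position, rel)]
    return project(position, f, cx, cy), project(end, f, cx, cy)


def stroke_angle_deg(p0, p1):
    # Image angle of the stroke, measured from the +x axis with y pointing down
    return math.degrees(math.atan2(p1[1] - p0[1], p1[0] - p0[0]))
```

For a drop 1 m to the right of the optical axis and 5 m ahead, falling at 9 m/s, the stroke is vertical when the car is parked, but at 20 m/s it tilts outward, away from the image center, because the drops rush toward the camera.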

Rain drops

Our raindrop augmentation simulates drops adhering to the glass in front of the camera. As above, we have to consider the camera, the vehicle, and the rain itself to create this augmentation.

The most important factor about the camera is its mounting position relative to the protective glass on which drops adhere, e.g. the windshield. This determines how large the drops will appear on the image and how strongly they are blurred.
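A thin-lens approximation shows why the mounting distance matters so much. Assuming the camera is focused at infinity, a drop at distance d from the lens is blurred into a circle of diameter f²/(N·d), where f is the focal length and N the f-number, and it appears roughly f/d times its real size (for d much larger than f). The function names and parameter values are hypothetical.

```python
def drop_footprint_px(drop_diam_mm, glass_distance_mm, focal_mm, pixel_pitch_um):
    """Approximate size of a drop on the sensor, in pixels."""
    # Magnification of an object at distance d is roughly f / d when d >> f
    size_mm = drop_diam_mm * focal_mm / glass_distance_mm
    return size_mm * 1000 / pixel_pitch_um


def defocus_blur_px(glass_distance_mm, focal_mm, f_number, pixel_pitch_um):
    """Blur-circle diameter, in pixels, for a thin lens focused at infinity."""
    # Thin lens focused at infinity: c = f^2 / (N * d)
    c_mm = focal_mm**2 / (f_number * glass_distance_mm)
    return c_mm * 1000 / pixel_pitch_um
```

With a 6 mm lens at f/2, 3 µm pixels, and glass 50 mm in front of the lens, a 3 mm drop covers about 120 px and is blurred by a similar amount; doubling the distance halves both.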

Regarding the vehicle, we have to consider its speed. This affects the orientation of the drops, which will run downwards if the car is stationary, but can move outwards or upwards for a vehicle in motion.
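The effect of speed can be illustrated with a toy heuristic rather than a fluid simulation: add a downward gravity term to an airflow term that points up and away from the image center and grows with the square of the vehicle speed. The airflow direction, the reference speed, and the function name are all assumptions.

```python
import math


def drop_run_direction(x_px, image_width_px, speed_mps, ref_speed_mps=15.0):
    """Toy heuristic: unit vector (dx, dy) in image coordinates (y down) along which
    a drop on the glass runs. Returns (0.0, 0.0) when the forces cancel."""
    gravity = (0.0, 1.0)  # drops run straight down when the car is parked
    # Assumed airflow over the glass: upward and away from the image center
    offset = (x_px - image_width_px / 2) / (image_width_px / 2)  # -1 left edge, +1 right edge
    airflow = (offset, -1.0)
    weight = (speed_mps / ref_speed_mps) ** 2  # aerodynamic drag grows with speed squared
    dx = gravity[0] + weight * airflow[0]
    dy = gravity[1] + weight * airflow[1]
    norm = math.hypot(dx, dy)
    if norm == 0.0:
        return 0.0, 0.0  # gravity and airflow balance: the drop stays put
    return dx / norm, dy / norm
```

At standstill the drop runs straight down; at twice the reference speed, a drop at the right edge of the image moves up and to the right.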

Now we can turn our focus to the droplets themselves. The number of raindrops is determined through a rain density function. The sizes of the raindrops can be set to depend on how severe we want the corruption to appear, with some natural variation.
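A minimal sketch of this sampling, assuming a density function that grows linearly with a severity parameter in [0, 1], a Poisson-distributed drop count, and lognormally distributed radii. The density, radius range, spread, and function name are hypothetical values chosen for illustration.

```python
import numpy as np


def sample_lens_drops(severity, image_shape, density_per_mpx=40.0, seed=None):
    """Return (centers [n, 2] as (x, y) pixels, radii [n] in pixels)."""
    rng = np.random.default_rng(seed)
    h, w = image_shape
    # Hypothetical density function: expected drops per megapixel scales with severity
    expected = density_per_mpx * severity * (h * w) / 1e6
    n = rng.poisson(expected)
    # Median radius grows with severity; the lognormal spread adds natural variation
    median_radius = 5.0 + 25.0 * severity
    radii = rng.lognormal(mean=np.log(median_radius), sigma=0.3, size=n)
    # Drop centers placed uniformly at random over the image
    centers = np.column_stack([rng.uniform(0, w, n), rng.uniform(0, h, n)])
    return centers, radii
```

Using a lognormal distribution keeps every radius positive while still producing the occasional large drop.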

With these criteria, we can then create the augmentation by sampling raindrops of different sizes and shapes and overlaying them at random positions on the image. As a final flourish, we add distortion and reflection effects, e.g. fish-eye-like reflections of the scene in the drops.
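The fish-eye-like reflection can be approximated by treating each drop as a tiny lens that shows an inverted, shrunk view of the surrounding scene. The sketch below paints each drop as a disk that samples the image at an inverted and scaled offset from the drop's center; the `zoom` factor and the function name are hypothetical, and blur and edge shading are left out.

```python
import numpy as np


def add_lens_drops(image, centers, radii, zoom=3.0):
    """Paint each drop as a disk showing a flipped, wider view of the scene.
    Works for grayscale (H, W) and color (H, W, C) arrays."""
    out = image.copy()
    h, w = image.shape[:2]
    ys, xs = np.mgrid[0:h, 0:w]
    for (cx, cy), r in zip(centers, radii):
        dx, dy = xs - cx, ys - cy
        inside = dx**2 + dy**2 <= r**2
        # A water drop acts like a small lens: it shows its surroundings upside down
        # and shrunk, so sample the source at an inverted, magnified offset
        src_x = np.clip(np.round(cx - zoom * dx), 0, w - 1).astype(int)
        src_y = np.clip(np.round(cy - zoom * dy), 0, h - 1).astype(int)
        out[inside] = image[src_y[inside], src_x[inside]]
    return out
```

Reading from the unmodified `image` rather than `out` keeps overlapping drops from reflecting each other.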

Composite rain effects

Our most advanced augmentation is a rainy-image augmentation that combines multiple effects, such as reflective streets, rain spray, and blur, to achieve a realistic composite effect. Creating it involves several steps.

First, we capture the gloomy colors of a rainy day by desaturating the image, making the colors less vivid. We then blur the image to simulate the wetness of the camera lens, which persists even after individual drops have dissipated.
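Both steps can be sketched in plain NumPy: desaturation blends each pixel toward its luminance (here using the Rec. 709 coefficients), and a separable box blur stands in for whatever lens-wetness blur a real pipeline would use. The function names and default strengths are assumptions, and images are assumed to be float RGB arrays in [0, 1].

```python
import numpy as np


def desaturate(image, amount=0.4):
    """Blend a float RGB image (H, W, 3) toward its luminance; amount=1 gives gray."""
    # Rec. 709 luminance coefficients
    luma = image @ np.array([0.2126, 0.7152, 0.0722])
    gray = np.repeat(luma[..., None], 3, axis=-1)
    return (1 - amount) * image + amount * gray


def box_blur(image, radius=2):
    """Separable box blur with edge padding, a simple stand-in for lens-wetness blur."""
    k = 2 * radius + 1
    h, w = image.shape[:2]
    padded = np.pad(image, ((radius, radius), (radius, radius), (0, 0)), mode="edge")
    # Average over a k-pixel window along the rows, then along the columns
    rows = sum(padded[i:i + h] for i in range(k)) / k
    return sum(rows[:, j:j + w] for j in range(k)) / k
```

A box blur is cheap and easy to reason about; a Gaussian kernel would give a softer, more lens-like falloff.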

Next, we usually make the sky overcast for this augmentation, even though in reality it is sometimes rainy and sunny at the same time.
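Assuming a boolean sky mask is available, e.g. from a segmentation map, an overcast sky can be approximated by blending sky pixels toward a gray that darkens slightly toward the top of the frame. The gray level, gradient, strength, and function name are hypothetical.

```python
import numpy as np


def make_overcast(image, sky_mask, cloud_gray=0.75, strength=0.8):
    """Blend sky pixels of a float RGB image (H, W, 3) toward a flat gray."""
    h = image.shape[0]
    # Vertical gradient: darker at the top of the frame, lighter toward the horizon
    gradient = np.linspace(cloud_gray * 0.85, cloud_gray, h)[:, None, None]
    overcast = np.broadcast_to(gradient, image.shape)
    out = image.copy()
    # Only pixels marked as sky are changed
    out[sky_mask] = (1 - strength) * image[sky_mask] + strength * overcast[sky_mask]
    return out
```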

To further tweak the environment, we add wet, reflective streets and spray thrown up from the street by other cars in the image.
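A very simple stand-in for a wet, reflective street mirrors the scene about the horizon and blends the mirrored pixels into the road region given by a segmentation mask. The horizon row, reflectivity, and function name are assumed inputs, and spray is not modeled here.

```python
import numpy as np


def add_wet_road(image, road_mask, horizon_row, reflectivity=0.35):
    """Mirror a float RGB image (H, W, 3) about horizon_row and blend it into road pixels."""
    h = image.shape[0]
    rows = np.arange(h)
    # Row r below the horizon reflects row 2 * horizon_row - r above it (clipped to the image)
    mirrored_rows = np.clip(2 * horizon_row - rows, 0, h - 1)
    reflection = image[mirrored_rows]
    out = image.copy()
    out[road_mask] = (1 - reflectivity) * image[road_mask] + reflectivity * reflection[road_mask]
    return out
```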

This corruption is typically tailored heavily to the client's needs, accounting for their particular camera configurations and environments, and it requires segmentation maps. But the value of the resulting data is well worth the effort.

Conclusion: Why all the effort?

Why does this matter to you and your autonomous vehicle's perception system? As you know, you have to train your perception model on data, and that data should reflect all the conditions your vehicle may encounter in its operational design domain (ODD).

But as we have discussed before, the environment can be uncooperative, and collecting the full distribution of conditions your vehicles need to recognize can be difficult or even impossible. Rain complicates perception functions, and every type of rain complicates them in different ways.

So ask yourself: are you confident the weather during your collection will perfectly reflect the overall multi-year distribution of weather in your ODD? Do you want to wake up every morning and have the weather forecaster tell you how your data collection will go today?

If you answered “no” and want to take control of your data campaigns, then you should look into using augmented data tools like aidkit to capture the full diversity and distribution of conditions within which your autonomous vehicle needs to operate safely.

Visual checks by humans are only half of validation; statistical proof is equally important. Check out our blog post on how we conduct experiments to verify that our augmentations reflect reality.