The testing of a perception model is an underappreciated step in ADAS/ADS development. With time-consuming collection and complicated model training done, confidence in swift validation and the enthusiasm for integration and deployment are high. Yet the reality is that testing can also involve a lot of time and complexity, more so for the expansive Operational Design Domains (ODDs) and high safety standards pursued by ambitious developers. This is where scaling rides in to save the day.

How we test

We have discussed before why we test our perception models prior to system integration. So let's focus here on how we test, thereby highlighting the need for scalability. To make the discussion concrete, we will use our own software, aidkit, as an example of a testing tool.

Testing your perception models requires, quite obviously, testing data. This data could be real, augmented, or synthetic. You can read about various merits of each type of data in this blog. Here we will focus on augmented data, with comparison to real data as the baseline with which most perception developers are familiar.

For testing, functionally we need images unseen by our machine learning (ML) model that reflect the ODD conditions and distribution of those conditions within which we want to ensure safe operation of the perception model. That is, if we want to test for safe operation in the rain, we need new images of rain of all the intensities relevant for our ODD.

Additionally, two non-functional factors will matter for our testing: cost in currency and cost in time. Once you reach the testing stage, you will be well aware of the cost of real-world data collection and labelling. So let's look at how you would test with augmented data and what some costs would be. Then we can compare real and augmented data and how to scale testing with each.

Why scaling matters

Our aidkit tool tests perception models using augmented image data. But while aidkit works at the image level, the perception systems it is testing are built on collections of images in rapid sequence. For proper testing aidkit needs to go through these images because, just like a person would not open their eyes for a second and be sure of what they saw, perception models are more confident if they see, for instance, several frames per second of a video.

Moreover, localization, planning, and prediction rely on the differences between the images in a sequence. aidkit may be focused on the sensing part of a perception system, but it is built to ensure this sensing supports all other ADS/ADAS functions. For instance, as a car switches lanes, its profile changes. aidkit is meant to ensure your perception also works during such maneuvers.

Thus, we already have a number of images equal to the desired frames per second times the length in seconds of the data you want to test. aidkit then augments the data you've collected (or a sample of it based on the ODD you want to test). These augmentations are used to obtain statistically significant confirmation of robustness (or lack thereof).
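As a quick sanity check of that image count, here is a minimal sketch. The function name and the example recording (200 seconds at 30 frames per second) are our own illustrative assumptions, not part of aidkit.

```python
def frame_count(frames_per_second: int, duration_seconds: float) -> int:
    """Number of images in a recording: frame rate times duration."""
    return round(frames_per_second * duration_seconds)


# Illustrative example: 200 seconds of video at 30 frames per second.
print(frame_count(30, 200))  # 6000
```

The same 6,000 images correspond to 100 seconds at 60 frames per second, or 400 seconds at 15.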

It is with this testing that the number of images starts to explode. You may upload 10,000 images, but aidkit will apply augmentations to each of these. The more robustness to different conditions (such as varying intensities of rain) you want to obtain, the more augmentations you will need.

Moreover, you cannot do each augmentation just once. A sample size of 1 is not exactly the route to statistical confidence. Just as you would need many similar real rainy images to be sure your model can handle that type of rain, you need many augmentations for such certainty.

Here, time enters the equation: it takes time to generate every augmentation. The amount of time per augmentation depends on the complexity of that augmentation. Augmentations that close the simulation gap both mathematically and visually, as both are important, tend to be complex.

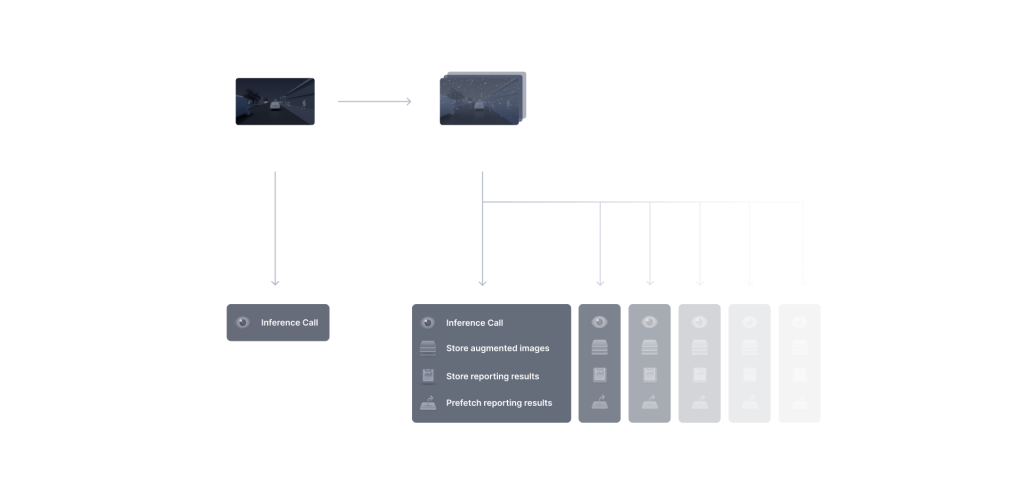

Once we have generated augmentations, we need to make an inference call to our model (or models!) for the original image and every augmentation of that image to see if the model correctly performs its task (e.g. segments, detects, or classifies). Each inference call takes time, and the bigger the model, the longer the inference time. Perception models for higher levels of autonomy will tend to be big.

Lastly, we have to store the images and report the results. For image storage, your testing tool needs to make intelligent decisions about what to store, or it must store everything, which will take time. Results need to be presentable both individually and in aggregate, and they should be prefetched so that viewing them does not take hours (remember, you ran a lot of tests!).

We've now reached the end of the exponential (or, to be precise, quadratic) growth in testing requirements. So how long will the tests take?

The scale of testing

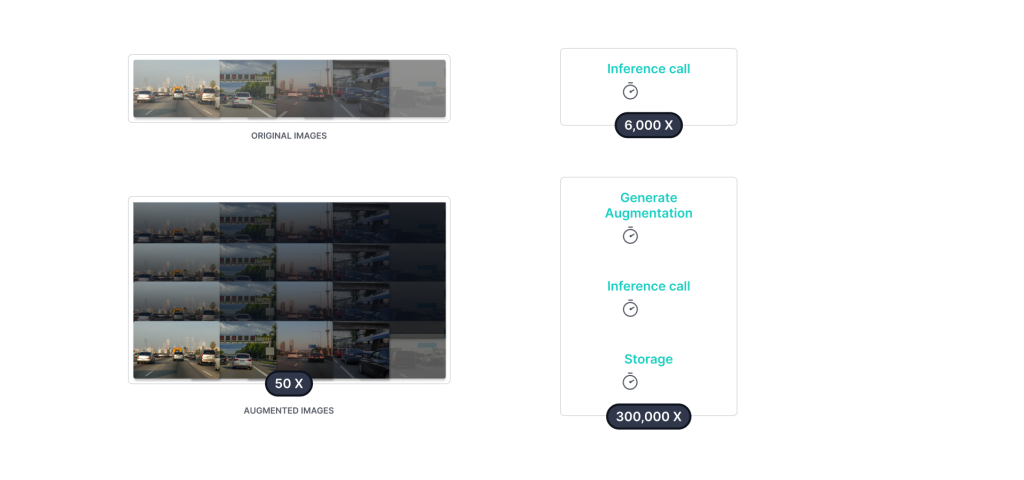

Let's say we have 6,000 images (chosen as a multiple of 60, 30, and 15 for ease of thinking in common frames per second). Starting with just 1 augmentation per image, we can assume we want to apply it 50 times for statistical significance. This gives us 300,000 images to be generated. Each of these, plus each original image, then needs an inference call for the testing, meaning 306,000 inference calls.

While computation times vary and can be optimized, for a decent code base running in a capable environment, a complex, visually appealing augmentation takes about 0.5 seconds to generate. An inference call will take at least 0.2 seconds for a decent model. Storage of the generated image then takes 0.4 seconds. Thus, if we run everything sequentially, it would take 331,200 seconds, which is 92 hours, or almost 4 days, to run our tests.
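The arithmetic behind that estimate can be written out as a short sketch. The function and parameter names are our own; the default values are the figures from the example above, and the calculation assumes strictly sequential execution with no overlap between generation, inference, and storage.

```python
def sequential_test_cost(
    originals: int = 6_000,
    augmentations_per_image: int = 1,
    repetitions: int = 50,
    generate_s: float = 0.5,   # seconds to generate one augmented image
    inference_s: float = 0.2,  # seconds per inference call
    store_s: float = 0.4,      # seconds to store one generated image
) -> dict:
    """Estimate image counts and runtime for a fully sequential test run."""
    generated = originals * augmentations_per_image * repetitions
    # Every generated image and every original image gets one inference call.
    inference_calls = generated + originals
    total_seconds = generated * (generate_s + store_s) + inference_calls * inference_s
    return {
        "generated_images": generated,
        "inference_calls": inference_calls,
        "hours": total_seconds / 3600,
    }


cost = sequential_test_cost()
print(cost["generated_images"])  # 300000
print(cost["inference_calls"])   # 306000
print(round(cost["hours"], 1))   # 92.0
```

Changing `originals`, `augmentations_per_image`, or `repetitions` shows how quickly the total grows, since the three factors multiply.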

But the runtime per image is not the main issue, so optimizing the above tasks for faster execution is not the main solution either. Rather, the issue is the combinatorial explosion that results from the need to test your perception model in all relevant conditions and scenarios for your ODD. Ensuring that all unreasonable risk has been removed requires hundreds of thousands of original images, leading to millions of augmented images, and the need to generate, call, store, and manage the results of these. Faster compute is not the solution; horizontally distributed compute is.

The complexity of scaling

We see from the above how important scaling is. So how do we scale and how hard is it?

First, let's touch on scaling with real data. Scaling with real data is easy but expensive and, moreover, may not provide the data you need. The problem with real data is the long tail (of the distribution): it is difficult to collect the rare data points (images), and yet these data points are often the most safety critical. Scaling real image collection thus requires more vehicles on the road more often, including in unsafe operating conditions so you can catch those edge cases.

The other option is to augment your existing data in order to get more variety in that data. The advantage here is that you can get what you need: if you need heavy rain, that is what you get. Scaling in this way also means you can scale "collection" (of augmented images) and testing at the same time.

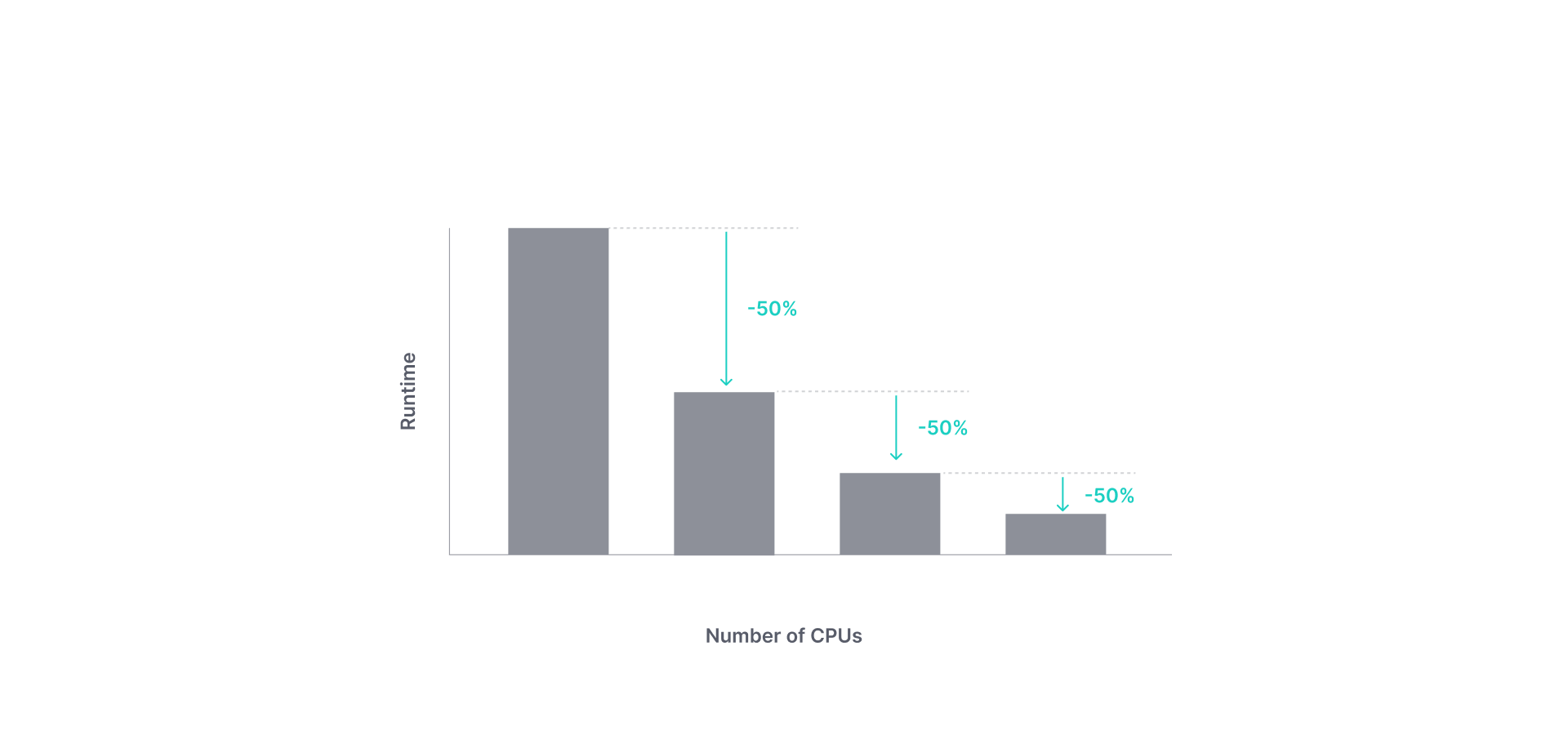

Scaling via augmentation is also cost neutral. When you scale by adding compute, the total cost remains roughly the same: you run your tests across more processors, but each of them runs for less time. Provided your testing programme is well built, this relationship between number of processors and time to completion should be very nearly linear: if 1 processor takes X time, 2 should take half of X time.
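To illustrate the idealized relationship, here is a small sketch using the 92-hour sequential estimate from the example above. It assumes perfectly linear scaling and compute billed per processor-hour, and it ignores coordination overhead, so real numbers will be somewhat worse. The function names are our own.

```python
def ideal_runtime_hours(sequential_hours: float, workers: int) -> float:
    """Wall-clock time under perfectly linear scaling: time shrinks as 1 / workers."""
    if workers < 1:
        raise ValueError("workers must be at least 1")
    return sequential_hours / workers


def processor_hours(sequential_hours: float, workers: int) -> float:
    """Total compute consumed: number of workers times wall-clock time per worker."""
    return workers * ideal_runtime_hours(sequential_hours, workers)


for workers in (1, 2, 8, 46):
    wall_clock = ideal_runtime_hours(92.0, workers)
    total = processor_hours(92.0, workers)
    print(f"{workers} workers: {wall_clock:.1f} h wall-clock, {total:.1f} processor-hours")
```

The wall-clock time drops from 92 hours to 2 hours at 46 workers, while the processor-hours, and hence the compute bill under these assumptions, stay at 92.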

It seems like a straightforward choice then: scaling with augmented data has all the advantages from a time, cost, and completeness perspective. Yet just above we hinted at why this straightforward choice becomes difficult: the programme must be well built, meaning not just fast but also stable. Splitting up augmentations and inference calls over different servers is not trivial. It requires a lot of coordination of different compute tasks, management of compute resources, communication between servers, and so forth.
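To give a feel for the basic pattern, here is a deliberately toy sketch that fans augmentation and inference tasks out to a pool of workers. It is not how aidkit is implemented: a thread pool on one machine stands in for separate servers, and `augment`, `infer`, and `run_tests` are hypothetical placeholders that do no real image processing.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Iterable, List, Tuple


def augment(image_id: int, seed: int) -> Tuple[int, int]:
    """Hypothetical placeholder: returns an identifier for the augmented image."""
    return (image_id, seed)


def infer(sample: Tuple[int, int]) -> bool:
    """Hypothetical placeholder for a model call; always reports a pass here."""
    return True


def run_task(task: Tuple[int, int]) -> Tuple[int, int, bool]:
    """One unit of work: augment a single image once, then test the model on it."""
    image_id, seed = task
    return image_id, seed, infer(augment(image_id, seed))


def run_tests(
    image_ids: Iterable[int], repetitions: int, max_workers: int = 4
) -> List[Tuple[int, int, bool]]:
    """Create one task per (image, repetition) pair and spread them over workers."""
    tasks = [(image_id, seed) for image_id in image_ids for seed in range(repetitions)]
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() returns results in task order, which keeps aggregation simple.
        return list(pool.map(run_task, tasks))


results = run_tests(range(3), repetitions=2)
print(len(results))  # 6
```

Everything this sketch leaves out is where the difficulty lies: moving tasks and images between servers, retrying failed work, balancing load, and collecting results reliably.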

The methods and means to implement scaling are not a secret, but they are incredibly time-consuming and costly to replicate. Building them also represents a pure development cost, meaning that once you deploy there is no more use for the tool you developed (or much more limited use, since you will fine-tune your models over time).

aidkit: Testing at scale

Engineering a perception solution is hard enough, and your team should not have to engineer a testing solution as well. But as we can see from the above, unless you want to leave money lying on the street and can accept delays in reaching your destination, you need a well-researched and well-engineered solution for testing at scale.

Thus, you will want to consider acquiring well-built testing software. This software will offset its own cost by reducing costs in other areas such as collection, labelling, and safety validation, as well as by ensuring that system integration and development proceed quickly and unhindered.

Well, guess what (surprise, surprise): we have the solution for you! Want to read about it in practice? Check out this use case. Or, if you want the broad strokes first, read more on our solutions page. Five minutes of reading now could save you five months of testing later.